Slingshot is the Ethernet-based high-speed interconnect that Cray developed as the successor to its Aries interconnect. The first generation runs at 200 Gbps per port and uses 64-port switches.

Cray released Slingshot in two phases:

- Slingshot-10 paired Slingshot switches (codenamed Rosetta) with Mellanox ConnectX-5 100G NICs. The NICs speak RoCE to the first switch, and inter-switch traffic is converted to the proprietary “HPC Ethernet” protocol.

- Slingshot-11 introduced 200G Slingshot NICs (codenamed Cassini) to replace the ConnectX-5 NICs. In addition to doubling injection bandwidth to 200G, it allowed the HPC Ethernet protocol to extend all the way to the compute nodes rather than relying on the switches to translate between standard Ethernet and HPC Ethernet.

In November 2024, HPE also announced Slingshot 400, which appears to be a serdes speed upgrade with no major enhancements to the Slingshot protocol.1

Rosetta

Rosetta is a 64-port, 200G switch built on 56G serdes with PAM4 signaling. It was designed with the dragonfly topology in mind and, at maximum scale, supports 279,040 endpoints using 32 switches per group, with each switch providing:

- 16x L0 ports

- 31x L1 ports

- 17x L2 ports

This gives a maximum of 545 groups (32 × 17 = 544 global links per group, plus the group itself), each with 512 endpoints (32 × 16).
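The sizing arithmetic can be checked with a short sketch. It assumes a conventional dragonfly in which every switch in a group links to every other switch in that group, and each pair of groups is joined by exactly one global link; those wiring assumptions and the function name `dragonfly_scale` are illustrative, not taken from HPE documentation.

```python
# Dragonfly sizing from the per-switch port split listed above.
SWITCHES_PER_GROUP = 32
L0_PORTS = 16  # endpoint-facing ports per switch
L1_PORTS = 31  # links to the other switches in the same group
L2_PORTS = 17  # global links to other groups


def dragonfly_scale(switches_per_group, l0, l1, l2):
    """Return (groups, endpoints_per_group, total_endpoints).

    ASSUMPTION: all-to-all wiring inside a group and exactly one
    global link between each pair of groups.
    """
    if l1 != switches_per_group - 1:
        raise ValueError("all-to-all groups need one L1 port per peer switch")
    endpoints_per_group = switches_per_group * l0
    global_links_per_group = switches_per_group * l2
    # Each global link reaches a distinct remote group; add the group itself.
    groups = global_links_per_group + 1
    return groups, endpoints_per_group, groups * endpoints_per_group


groups, per_group, total = dragonfly_scale(
    SWITCHES_PER_GROUP, L0_PORTS, L1_PORTS, L2_PORTS
)
print(groups, per_group, total)  # 545 512 279040
```

Under these assumptions the 16/31/17 split uses all 64 ports and yields 545 groups of 512 endpoints, or 279,040 endpoints in total.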

Rosetta switches implement a crossbar using 32 tiles, each serving two switch ports. The ASIC is built on TSMC 16 nm, and each one consumes 250 W.

Cassini

Cassini is the 200G NIC that can speak both HPC Ethernet and standard Ethernet. It supports hardware tag matching, offloading MPI progression, one-sided operations, and collectives.2 It is available both in a Cray EX mezzanine form factor and as a standard PCIe add-in card (AIC).

Software

Slingshot’s primary API is libfabric, and its libfabric provider is built on a lower-level API called CXI. Because libfabric only provides a user-space API, Cray created kfabric so that its ClusterStor E1000 Lustre appliances (whose clients are kernel-space Lustre clients) could use Cassini NICs for end-to-end RDMA.3

Protocol

Slingshot uses “HPC Ethernet”, a modified version of standard Ethernet that increases packet rate and reduces latency:4

- Reduces the 64-byte minimum frame size to 40 bytes (or a 32-byte payload with embedded sideband data) by shrinking the preamble and allowing IP packets to be sent without an L2 header

- Removes the 12-byte (96-bit) inter-packet gap

- Uses credit-based flow control

- Implements forward error correction

- Implements link-level retry
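To get a rough sense of what the smaller frames and the removed inter-packet gap buy in packet rate, here is a back-of-the-envelope sketch. It uses the standard Ethernet figures of a 64-byte minimum frame, an 8-byte preamble plus start-of-frame delimiter, and a 12-byte inter-packet gap. For HPC Ethernet it assumes that a minimum-size packet occupies exactly 40 bytes on the wire, ignoring any residual preamble and FEC overhead; that is a simplifying assumption, so treat the result as an upper bound rather than a published figure.

```python
LINK_BPS = 200e9  # Slingshot port speed, bits per second


def packets_per_second(bytes_on_wire, link_bps=LINK_BPS):
    """Packet rate when every packet occupies `bytes_on_wire` bytes."""
    return link_bps / (bytes_on_wire * 8)


# Standard Ethernet: 64-byte minimum frame + 8-byte preamble/SFD + 12-byte gap.
STD_BYTES = 64 + 8 + 12

# HPC Ethernet: ASSUMPTION that a minimum-size packet takes 40 bytes on the
# wire in total (no inter-packet gap, residual preamble ignored).
HPC_BYTES = 40

std_pps = packets_per_second(STD_BYTES)
hpc_pps = packets_per_second(HPC_BYTES)

print(f"standard Ethernet: {std_pps / 1e6:.1f} Mpps")
print(f"HPC Ethernet:      {hpc_pps / 1e6:.1f} Mpps")
print(f"ratio:             {hpc_pps / std_pps:.2f}x")
```

Under these assumptions, the minimum-size packet rate on a 200 Gbps port rises from about 298 Mpps to 625 Mpps, a factor of 2.1.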

Slingshot 400

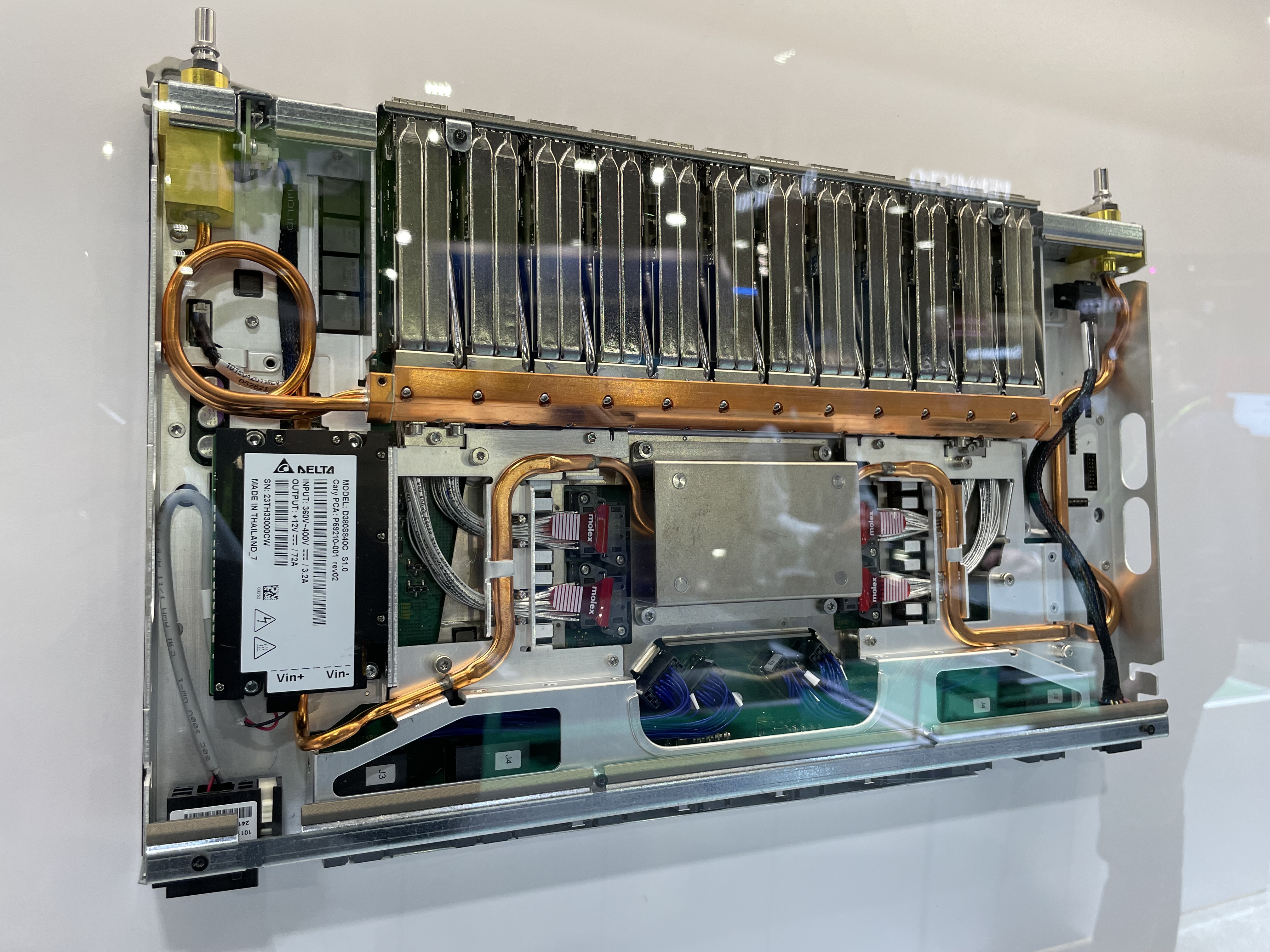

Here is a Slingshot 400 switch that was on display at SC24:

The placard under it said:

HPE Slingshot with 200 Gbps is powering the world’s exascale machines. The second generation is now available with 400 Gbps also as TOR switch with PCIe NICs

- 64 port switch with 51.2 Tbps bi-directional bandwidth and 400 Gbps per port

- Matching PCIe NICs with 400 Gbps per port

- End-to-end adaptive routing and congestion management for ultra-low latency

- Ultra Ethernet is the future, HPE Slingshot delivers today!
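The placard's 51.2 Tbps figure is consistent with counting both directions of all 64 ports, as this small sketch shows. The helper name `switch_bandwidth_tbps` is illustrative only.

```python
def switch_bandwidth_tbps(ports, port_gbps):
    """Return (per-direction, bidirectional) aggregate bandwidth in Tbps."""
    per_direction = ports * port_gbps / 1000
    return per_direction, 2 * per_direction


# Slingshot 400: 64 ports at 400 Gbps each, per the placard.
ss400_one_way, ss400_both_ways = switch_bandwidth_tbps(64, 400)
print(ss400_one_way, ss400_both_ways)  # 25.6 51.2

# First-generation Rosetta for comparison: 64 ports at 200 Gbps each.
rosetta_one_way, rosetta_both_ways = switch_bandwidth_tbps(64, 200)
print(rosetta_one_way, rosetta_both_ways)  # 12.8 25.6
```

In other words, the per-direction switching capacity is 25.6 Tbps, twice that of the 200 Gbps Rosetta switch.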

Footnotes

1. HPE expands direct liquid-cooled supercomputing solutions, introduces two AI systems for service providers and large enterprises | HPE

2. Cray’s Slingshot Interconnect Is At The Heart Of HPE’s HPC And AI Ambitions (nextplatform.com)

3. How Cray Makes Ethernet Suited For HPC And AI With Slingshot (nextplatform.com)