Chips

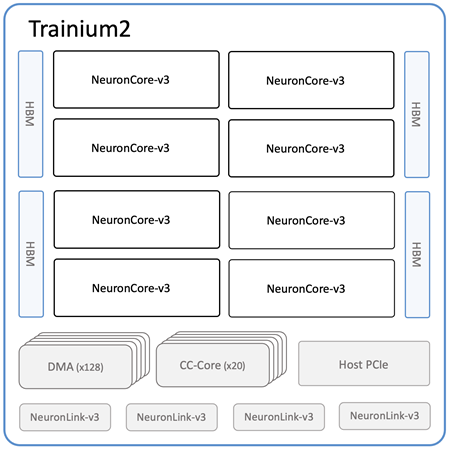

Trainium2 is Amazon’s in-house AI training accelerator. A single Trainium2 chip has:1

- 8 NeuronCore-v3 cores

- 96 GiB HBM (2.9 TB/s)

- 1.3 PF FP8 with support for 4:1 sparsity

Graphically:1

Trn2 Instances

Trainium2 chips are packaged into Trn2 instances, each with:

- 16 Trainium2 chips

- 128 NeuronCore-v3

- 1.5 TiB HBM

- 20.8 PF FP8

- 192 vCPUs

- 2 TiB DDR DRAM

- 3.2 Tbps of EFA v3
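The chip-derived figures in this list follow from multiplying the per-chip numbers by 16. A minimal sketch of that arithmetic, where the dictionary keys and the `scale` helper are names made up for illustration:

```python
# Per-chip figures quoted above for a single Trainium2 chip.
CHIP = {"neuron_cores": 8, "hbm_gib": 96, "fp8_pflops": 1.3}
CHIPS_PER_INSTANCE = 16


def scale(per_unit, count):
    """Multiply every per-unit figure by the number of units."""
    return {key: value * count for key, value in per_unit.items()}


instance = scale(CHIP, CHIPS_PER_INSTANCE)
print(instance["neuron_cores"])          # 128
print(instance["hbm_gib"] / 1024)        # 1.5 (TiB)
print(round(instance["fp8_pflops"], 1))  # 20.8
```

The vCPU, DDR DRAM, and EFA figures belong to the host rather than the chips, so they are not derived here.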

The chip-to-chip interconnect within a Trn2 instance is described as a “2D torus” (isn’t that a mesh?).
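The difference between the two terms is whether the edges wrap around: in a torus, a chip on one edge links to the chip on the opposite edge, while in a mesh it does not. The sketch below counts direct neighbors under each reading. It assumes the 16 chips sit on a 4x4 grid, which is an assumption for illustration, and `neighbors` is a hypothetical helper rather than anything from AWS tooling.

```python
def neighbors(x, y, width=4, height=4, wraparound=True):
    """Return the grid positions directly linked to (x, y).

    wraparound=True models a 2D torus, wraparound=False a 2D mesh.
    The 4x4 default is an assumed layout for 16 chips.
    """
    result = set()
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if wraparound:
            # Torus: stepping off one edge re-enters from the opposite edge.
            nx, ny = nx % width, ny % height
        elif not (0 <= nx < width and 0 <= ny < height):
            # Mesh: there is no link past the edge of the grid.
            continue
        result.add((nx, ny))
    return result


corner = (0, 0)
print(len(neighbors(*corner, wraparound=True)))   # 4
print(len(neighbors(*corner, wraparound=False)))  # 2
```

In a torus every position has the same number of links, so a corner chip is no worse connected than one in the middle. In a mesh the corner and edge chips have fewer.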

UltraServers

It seems like Trn2 instances are packaged into rack-scale UltraServers, each containing four Trn2 instances.

Each UltraServer has:

- 64 Trainium2 chips

- 512 NeuronCore-v3

- 6 TiB HBM

- 83 PF FP8

- 768 vCPUs

- 8 TiB DDR DRAM

- 12.8 Tbps of EFA v3
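These figures are the Trn2 instance numbers multiplied by four. A quick check, with dictionary keys chosen here purely for illustration:

```python
# Per-instance figures quoted in the Trn2 Instances list.
TRN2_INSTANCE = {
    "chips": 16,
    "neuron_cores": 128,
    "hbm_tib": 1.5,
    "fp8_pflops": 20.8,
    "vcpus": 192,
    "dram_tib": 2,
    "efa_tbps": 3.2,
}
INSTANCES_PER_ULTRASERVER = 4

# Scale every figure by four; round to one decimal to hide float noise.
ultraserver = {
    name: round(value * INSTANCES_PER_ULTRASERVER, 1)
    for name, value in TRN2_INSTANCE.items()
}

for name, value in ultraserver.items():
    print(f"{name}: {value}")
```

The FP8 total comes out to 83.2 PF, which the list above rounds to 83.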

The toruses (meshes?) of the four Trn2 instances within an UltraServer are connected in some kind of non-3D torus(?). The exact language is “cores at corresponding XY positions in each of the four instances are connected in a ring.”
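One way to read the quoted sentence is sketched below. It assumes each instance is a 4x4 torus, and that "connected in a ring" means each XY position links to the same position in the next and previous instance. Both are assumptions, and `ultraserver_neighbors` is a hypothetical helper.

```python
def ultraserver_neighbors(x, y, z, side=4, instances=4):
    """Return (in-instance links, cross-instance links) for position (x, y)
    in instance z, under the assumed 4x4 torus plus ring-of-four reading."""
    in_instance = {
        ((x + 1) % side, y, z),
        ((x - 1) % side, y, z),
        (x, (y + 1) % side, z),
        (x, (y - 1) % side, z),
    }
    # Ring across instances: same XY position, next and previous instance.
    ring = {
        (x, y, (z + 1) % instances),
        (x, y, (z - 1) % instances),
    }
    return in_instance, ring


in_instance, ring = ultraserver_neighbors(0, 0, 0)
print(sorted(ring))                  # [(0, 0, 1), (0, 0, 3)]
print(len(in_instance) + len(ring))  # 6
```

Under this reading every position has six links, which is the same neighbor structure a 4x4x4 3D torus would have. Whether the physical wiring actually matches this is not something the quote settles.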

Here is what an UltraServer looks like from the front:2

From this, it looks like each UltraServer has:

- Two racks

- 64 (8x4) individual nodes

- Cross-rack cabling

Here is a closer-up view of what one of those nodes looks like:3

Zooming in, the labeling implies

- There are two “GPUs” per physical sled with “left” and “right” ports.

- There are external PCIe ports

The above photo of a node doesn’t quite match the idealized two-rack UltraServer diagram, though.

This is what an UltraServer looks like from the back:3

UltraCluster

AWS announced that it will build its next flagship AI training cluster using Trainium, and Anthropic will be the customer for it.3 This cluster, Rainier, will be located in the continental US and will have “hundreds of thousands” of Trainium2 accelerators.