Microsoft has used SmartNICs in all of its Azure servers to offload SDN functions since 2015.[^1] Historically, Microsoft has implemented these NICs as a bump-in-the-wire FPGA sitting between a standard host NIC (like a Mellanox ConnectX-5) and the network cable that connects to a switch.[^1]

History

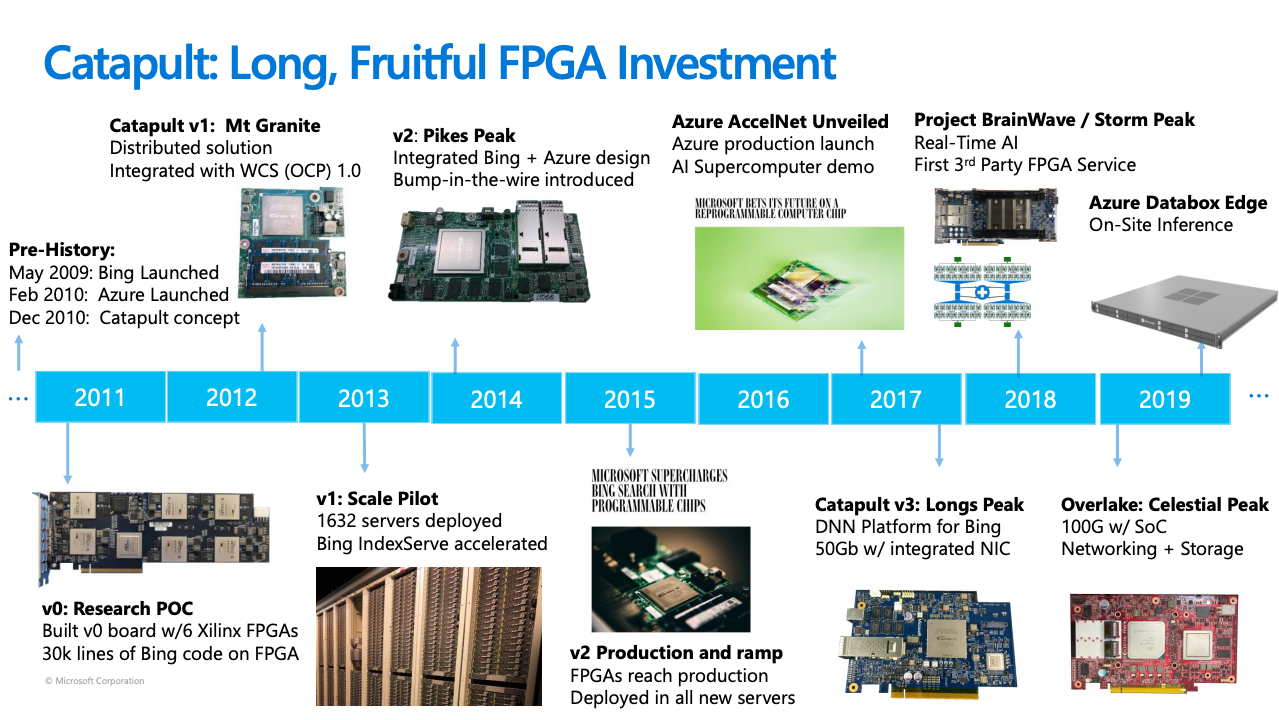

Project Catapult was a Microsoft Research program that used network-attached FPGAs to accelerate distributed computations for Bing. That effort built FPGA expertise within Microsoft, which then informed Azure’s SmartNIC program, detailed in SmartNICs in the Public Cloud.

Project Catapult produced network-attachable FPGA hardware and techniques for programming it, which a different part of Microsoft then adopted to create SDN accelerators within NICs. As a result, some of the physical NICs have multiple names depending on their application area, and several generational numbering schemes are in use at once, which gets confusing.

The history slide above, presented at Fermilab in 2022, is summarized below:

| Speed | Research name | SmartNIC name | FPGA |
|---|---|---|---|
| 40G | Catapult v2 | Pikes Peak | Altera Stratix V[^1] |
| 50G | Catapult v3 | Longs Peak | Altera Arria 10[^2] |
| 100G | Project Brainwave | Storm Peak | Altera Stratix 10[^2] |
| 100G | Overlake | Celestial Peak | Altera Stratix 10[^3] |
| 200G | MANA |

Microsoft also had an Azure HBv4 node with a “second-generation”[^4] 80G NIC on display at SC22,[^5] showing the same model number (A-2040) as Celestial Peak.[^3] When you use this node, the NIC appears as a ConnectX-5 Ethernet adapter and does not need any special drivers, consistent with older generations that combined the FPGA with a Mellanox NIC.
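One way to check which kernel driver a NIC is bound to on a Linux node is to read the `driver` symlink under `/sys/class/net`. The sketch below is illustrative and does not come from any of the sources cited here. The function name `nic_drivers` is hypothetical, and the expectation that a ConnectX-5 reports the stock `mlx5_core` driver is an assumption based on how Mellanox adapters normally appear in Linux, not something verified on an HBv4 node.

```python
import os
from pathlib import Path


def nic_drivers(sysfs_net="/sys/class/net"):
    """Map each network interface name to its bound kernel driver (or None).

    Hypothetical helper for illustration; `sysfs_net` is a parameter so the
    function can be pointed at a fake directory tree for testing.
    """
    result = {}
    root = Path(sysfs_net)
    if not root.is_dir():
        return result
    for iface in sorted(root.iterdir()):
        driver_link = iface / "device" / "driver"
        # Virtual interfaces such as "lo" have no backing device,
        # so they have no driver symlink to read.
        if driver_link.is_symlink():
            result[iface.name] = os.path.basename(os.readlink(driver_link))
        else:
            result[iface.name] = None
    return result


if __name__ == "__main__":
    for name, driver in nic_drivers().items():
        print(f"{name}: {driver or 'no device driver'}")
```

If the NIC really is presented as a standard ConnectX-5, the corresponding interface would be expected to list `mlx5_core` here rather than a Microsoft-specific driver.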

MANA

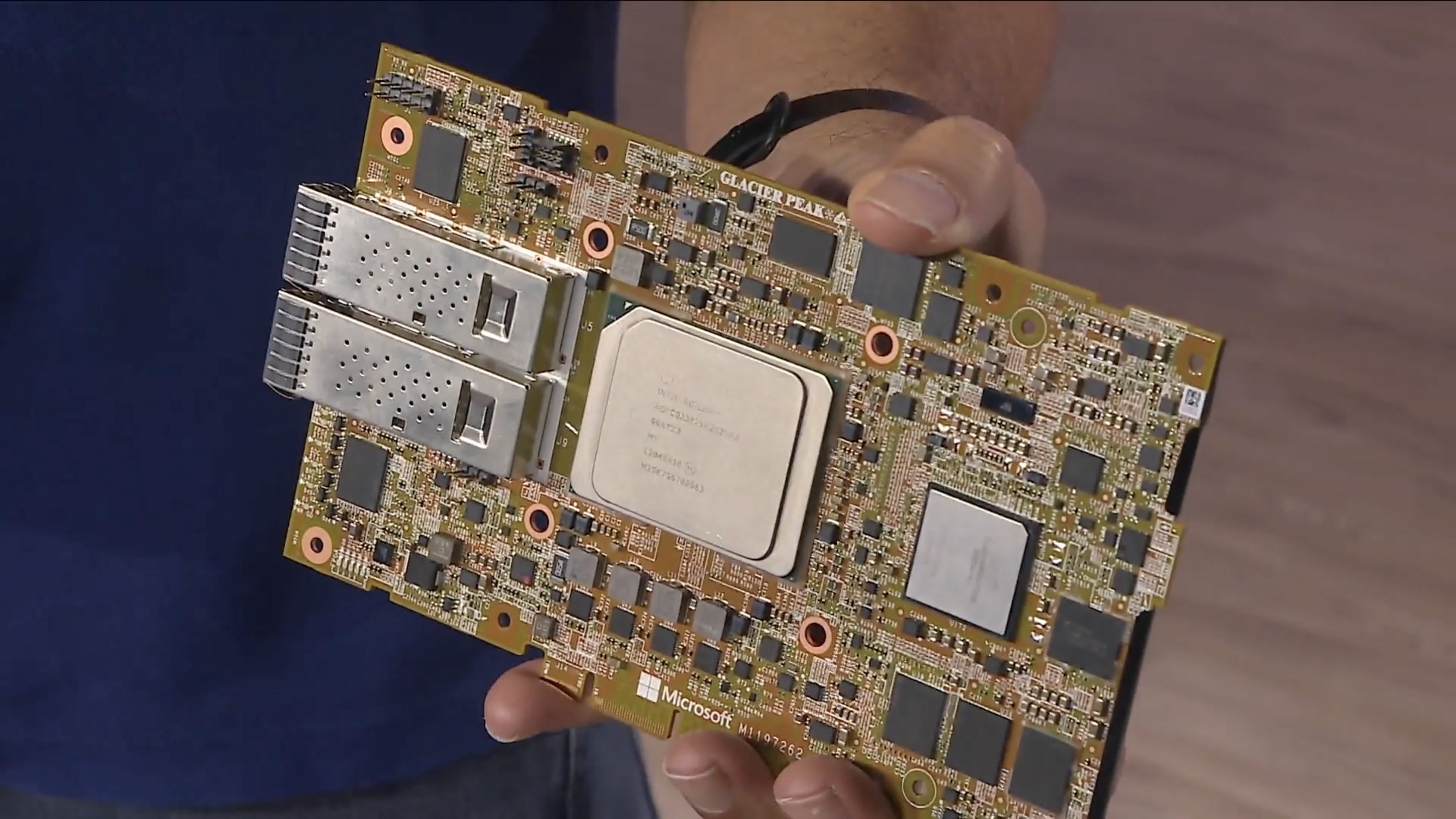

The Microsoft Azure Network Adapter (MANA) is a 200G SmartNIC[^6] that was unveiled at Build 2022.[^7] It combines an FPGA (apparently undisclosed) and an Arm SoC running Microsoft Linux on a single card, as shown by Mark Russinovich in the image above.

These NICs are implemented entirely by Microsoft and require a special RDMA driver, which has been upstreamed to Linux.[^8] Funnily enough, SmartNICs in the Public Cloud considered this approach too much work:

> Another option was to build a full NIC, including SR-IOV, inside the FPGA — but this would have been a significant undertaking (including getting drivers into all our VM SKUs), and would require us to implement unrelated functionality that our currently deployed NICs handle, such as RDMA.

Second-hand

For some reason, these older NICs often appear for sale on eBay, but they aren’t immediately usable when plugged into standard servers.[^3] For example, searching for “Microsoft A-2040” on eBay brings up a number of Celestial Peak NICs for sale from China. As a result, there’s a fair amount of speculation online about this hardware.

Given the tight integration between these FPGAs and the Azure hypervisor described in SmartNICs in the Public Cloud, it’s not surprising these cards don’t function on their own.

Footnotes

- See https://www.microsoft.com/en-us/research/uploads/prod/2017/08/HC29.22622-Brainwave-Datacenter-Chung-Microsoft-2017_08_11_2017.compressed.pdf which is linked from https://www.microsoft.com/en-us/research/blog/microsoft-unveils-project-brainwave/
- Move on to the new toy, Celestial Peak. How can we RE it ? · Issue #2 · tow3rs/catapult-v3-smartnic-re · GitHub
- HBv4-series VM overview, architecture, topology - Azure Virtual Machines | Microsoft Learn
- Microsoft Azure at SC22 and The Enormous AMD EPYC Genoa Heatsink on HBv4 (servethehome.com)
- Microsoft Build 2023 Inside Azure Innovations - Infrastructure acceleration through offload
- net: mana: Add a driver for Microsoft Azure Network Adapter (MANA) - kernel/git/netdev/net-next.git - Netdev Group’s -next networking tree