B300, or Blackwell Ultra, is NVIDIA’s follow-on to its B200 GPU. It will have similar power consumption to B200 but is new silicon.

Each GPU will have [1]:

- 2 reticle-sized GPU dies

- 288 GB HBM3e

- Essentially no FP64 performance

- Upgrade to PCIe Gen6

- Upgrade to 1400 W (from 1200 W)

- Upgrade to 12-high HBM3e stacks (from 8-high)

Specifically, the “full implementation” (not taking into account deactivated components) has [2]:

- 8 GPCs

- 160 SMs (20 per GPC)

- 20,480 CUDA cores (128 per SM)

- 640 tensor cores (4 per SM)

- ? GHz (low precision), ? GHz (high precision)

- 2:4 structured sparsity

- 288 GB HBM3e (8 stacks, 12-high)

- 8 TB/s HBM bandwidth (max)

- 2x 900 GB/s NVLink 5 (D2D)

- 2x 256 GB/s PCIe Gen6 (H2D)

- 1400 W maximum
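The totals in the list above follow from the per-unit counts, and the HBM figures imply a per-stack and per-die capacity. The sketch below just redoes that arithmetic; the constant and function names are illustrative, and the per-stack bandwidth assumes the 8 TB/s is spread evenly across the 8 stacks.

```python
# Figures copied from the "full implementation" list above.
GPCS = 8
SMS_PER_GPC = 20
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
HBM_STACKS = 8
HBM_STACK_HEIGHT = 12        # DRAM dies per stack ("12-high")
HBM_CAPACITY_GB = 288
HBM_BANDWIDTH_TBPS = 8       # assumed to be split evenly across stacks


def derive_totals():
    """Recompute the aggregate counts from the per-unit figures."""
    sms = GPCS * SMS_PER_GPC
    return {
        "sms": sms,
        "cuda_cores": sms * CUDA_CORES_PER_SM,
        "tensor_cores": sms * TENSOR_CORES_PER_SM,
        "gb_per_stack": HBM_CAPACITY_GB / HBM_STACKS,
        "gb_per_dram_die": HBM_CAPACITY_GB / (HBM_STACKS * HBM_STACK_HEIGHT),
        "tbps_per_stack": HBM_BANDWIDTH_TBPS / HBM_STACKS,
    }


if __name__ == "__main__":
    for name, value in derive_totals().items():
        print(f"{name}: {value}")
```

The 288 GB capacity works out to 36 GB per stack, or 3 GB per DRAM die in a 12-high stack.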

It is clearly a part optimized for inferencing.

Performance

The HGX variant of B300 has similar performance to B200 [3]:

Values are per GPU, in TFLOPS (TOPS for INT8).

| Data Type | VFMA | Matrix | Sparse |
|---|---|---|---|
| FP64 | 1.25 | 1.25 | |
| FP32 | 75 | | |
| TF32 | | 1125 | 2250 |
| FP16 | | 2250 | 4500 |
| BF16 | | 2250 | 4500 |
| FP8 | | 4500 | 9000 |
| FP6 | | 4500 | 9000 |
| FP4 | | 13125 | 18000 |
| INT8 | | 4500 | 9000 |

The biggest differences are:

- Dense FP4 performance is up (13 PF on B300 vs. 9.0 PF on B200)

- FP64 performance is dramatically lower (1.25 TF on B300 vs. 37 TF on B200)
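To put numbers on those two differences, here is a small sketch that computes the B300-to-B200 ratios from the figures quoted above. It also compares the two FP8 and FP4 figures from the table, on the assumption that they are the dense and 2:4 sparse tensor-core rates. The dictionary and function names are made up for illustration.

```python
# Per-GPU rates in TFLOPS, taken from the text and table above.
B300 = {"FP64": 1.25, "FP4 dense": 13125}
B200 = {"FP64": 37, "FP4 dense": 9000}

# Assumption: the two table figures per data type are dense and 2:4 sparse rates.
B300_DENSE_SPARSE = {"FP8": (4500, 9000), "FP4": (13125, 18000)}


def speedup(data_type):
    """Ratio of the B300 rate to the B200 rate for one data type."""
    return B300[data_type] / B200[data_type]


def sparsity_gain(data_type):
    """Ratio of the sparse rate to the dense rate on B300."""
    dense, sparse = B300_DENSE_SPARSE[data_type]
    return sparse / dense


if __name__ == "__main__":
    for data_type in B300:
        print(f"{data_type}: B300 is {speedup(data_type):.3f}x B200")
    for data_type in B300_DENSE_SPARSE:
        print(f"{data_type}: sparse is {sparsity_gain(data_type):.2f}x dense")
```

Under these numbers, dense FP4 is about 1.46x B200 while FP64 drops by a factor of about 30. Sparsity doubles FP8 throughput, but the FP4 figures differ by only about 1.37x.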

GB300 NVL72

GB300 NVL72 is advertised to have [1]:

- 1.5x the AI performance of GB200 NVL72

- 1.1 EF dense FP4 for inference

- 360 PF FP8 for training

- 20 TB HBM at 576 TB/s

- 40 TB “Fast Memory”

- 18 NVLink Switches at 130 TB/s

- 130 trillion transistors

- 2,592 Grace CPU cores

- 72 ConnectX-8 NICs

- 18 BlueField DPUs
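Most of the rack-level figures can be sanity-checked against the per-GPU specs listed earlier. The sketch below is that back-of-the-envelope check, not NVIDIA's accounting. It assumes one Grace CPU per two GPUs (as on the carrier board described below) and 72 cores per Grace CPU, and all names are illustrative.

```python
GPUS_PER_RACK = 72
HBM_PER_GPU_GB = 288
HBM_BW_PER_GPU_TBPS = 8
NVLINK_PER_GPU_GBPS = 2 * 900    # 2x 900 GB/s NVLink 5
GPUS_PER_GRACE = 2               # assumption: 2 Grace CPUs per 4 GPUs
CORES_PER_GRACE = 72             # assumption: cores per Grace CPU
RACK_FP4_DENSE_PF = 1100         # 1.1 EF dense FP4, as advertised
RACK_FP8_PF = 360                # 360 PF FP8, as advertised


def rack_totals():
    """Aggregate per-GPU specs up to one 72-GPU NVLink domain."""
    grace_cpus = GPUS_PER_RACK // GPUS_PER_GRACE
    return {
        "hbm_tb": GPUS_PER_RACK * HBM_PER_GPU_GB / 1000,
        "hbm_bw_tbps": GPUS_PER_RACK * HBM_BW_PER_GPU_TBPS,
        "nvlink_tbps": GPUS_PER_RACK * NVLINK_PER_GPU_GBPS / 1000,
        "grace_cores": grace_cpus * CORES_PER_GRACE,
        "fp4_pf_per_gpu": RACK_FP4_DENSE_PF / GPUS_PER_RACK,
        "fp8_pf_per_gpu": RACK_FP8_PF / GPUS_PER_RACK,
    }


if __name__ == "__main__":
    for name, value in rack_totals().items():
        print(f"{name}: {value:.2f}")
```

The HBM bandwidth (576 TB/s) and Grace core count (2,592) match the advertised figures exactly. The HBM capacity (20.7 TB) and NVLink bandwidth (129.6 TB/s) match after rounding. The advertised 1.1 EF of dense FP4 works out to roughly 15.3 PF per GPU, which is higher than the HGX B300 figure of about 13.1 PF.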

GB300 NVL72 maintains compatibility with the GB200 NVL72 Oberon rack but has a few notable differences:

- The compute tray uses a single carrier board with two Grace CPUs and four Blackwell GPUs instead of two identical boards with one CPU and two GPUs (1C:2G) each.

- Mellanox ConnectX-8 NICs are included on the board now

- CPUs, GPUs, and NICs are all socketed and individually replaceable now

- The server is designed to be 100% liquid-cooled

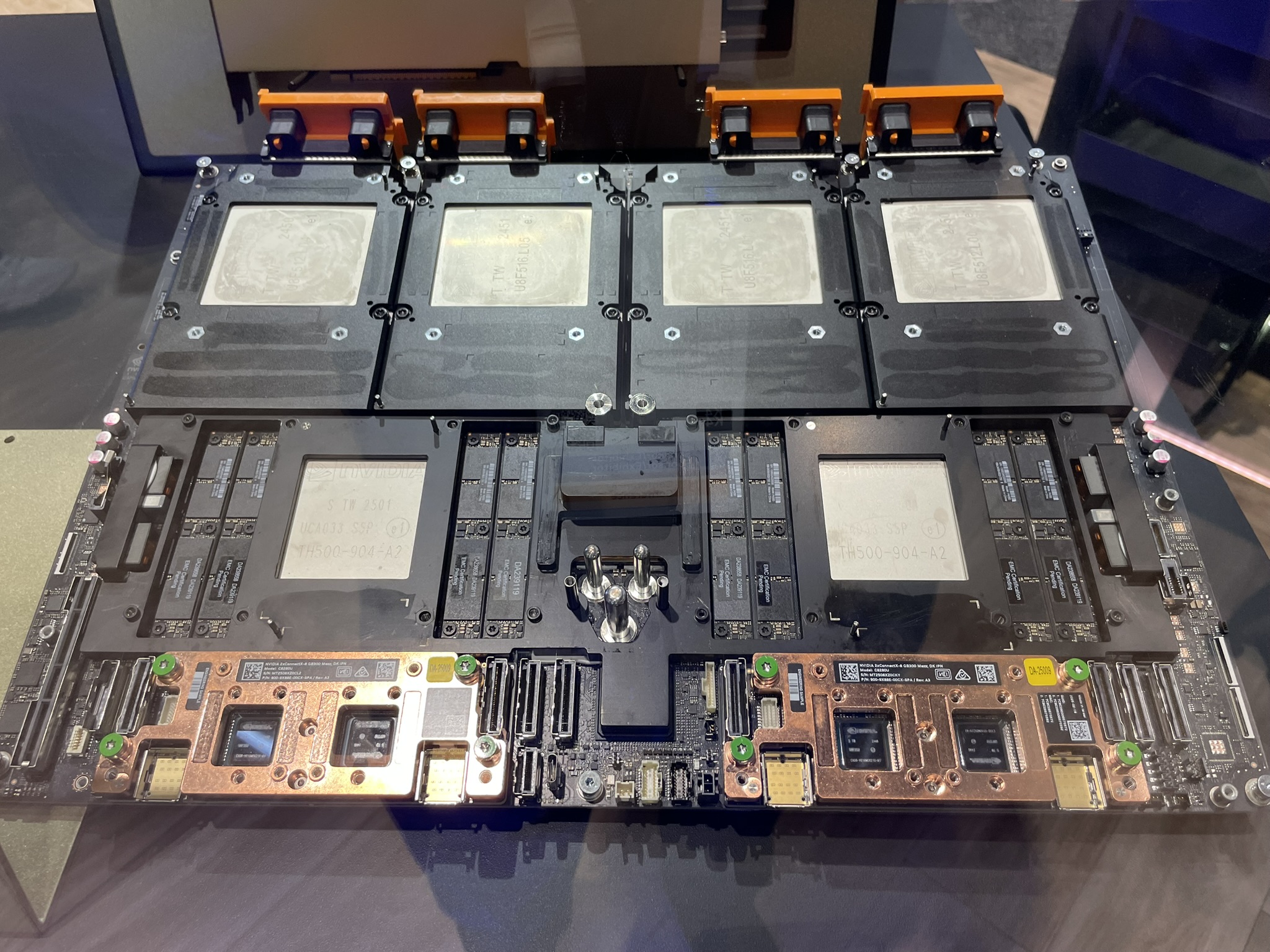

Here is a photo of the GB300 NVL72 superchip board I took at GTC25:

From top to bottom are the NVLink connectors (orange), four B300 GPUs, two Grace CPUs, and four ConnectX-8 NICs (copper).

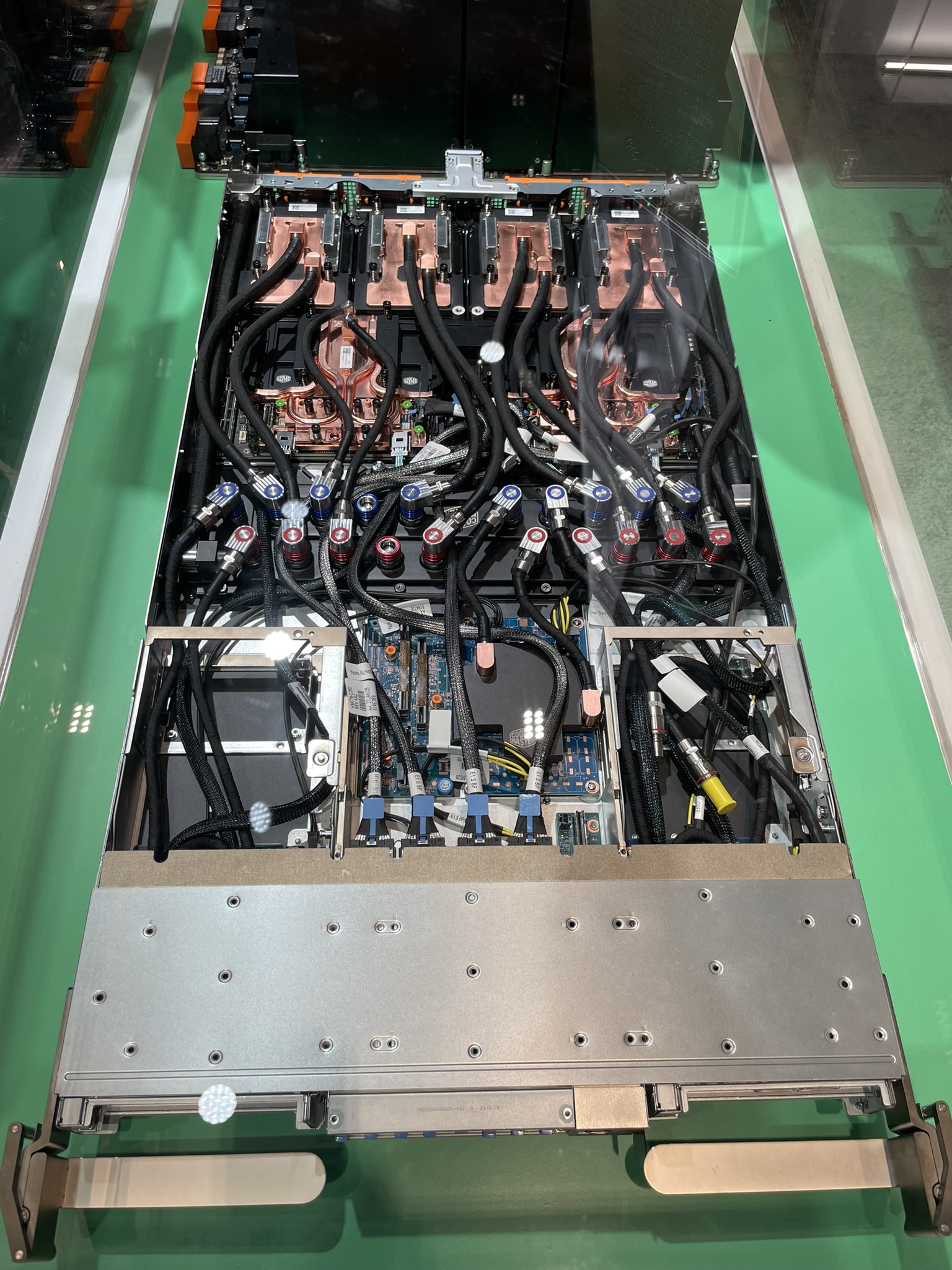

Here is HPE’s implementation of the GB300 NVL72 server sled:

A liquid manifold replaces the row of fans that was in the GB200 NVL72 server platform.

GB300 NVL72 uses the same rack power and liquid infrastructure as GB200 NVL72, fits within the same rack power density, and maintains 72 GPUs per NVLink domain [4]. It debuted at GTC25.

The photo below shows the DGX GB300 (left) and DGX GB200 (right). They are indistinguishable.

[Photo: DGX GB300 (left) and DGX GB200 (right)]

A single GB300 NVL72 rack is rumored to cost between $3.7 and $4 million [5].
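For a rough sense of scale, the rumored rack price can be divided across its 72 GPUs. This is only a sketch. It charges the entire rack (CPUs, NICs, switches, cooling) to the GPUs, so it is an amortized figure rather than a GPU price, and the names are illustrative.

```python
GPUS_PER_RACK = 72
RUMORED_RACK_COST_USD = (3.7e6, 4.0e6)   # low and high ends of the rumored range


def cost_per_gpu(rack_cost_usd, gpus=GPUS_PER_RACK):
    """Whole-rack cost amortized evenly over the GPUs in the rack."""
    return rack_cost_usd / gpus


if __name__ == "__main__":
    low, high = (cost_per_gpu(cost) for cost in RUMORED_RACK_COST_USD)
    print(f"Amortized rack cost per GPU: ${low:,.0f} to ${high:,.0f}")
```

That comes to roughly $51,000 to $56,000 of rack cost per GPU.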