B200 is NVIDIA’s top-shelf Blackwell-generation datacenter GPU. Each GPU has:

- 10 GPCs[1]
- 160 SMs (20 per TPC[2])
  - 148 active[3]
  - 80 per die
- 20,480 FP32 cores (128 per SM)
- 640 tensor cores (4 per SM)
- ? GHz (low precision), ? GHz (high precision)
- 2:4 structured sparsity
- 186 GB HBM3e (8 stacks)[4]
  - 8 TB/s (max)
- 2x 900 GB/s NVLink 5 (D2D)[5]
- 2x 256 GB/s PCIe Gen6 (H2D)[5]
- 1,200 W maximum
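The core counts in the list follow directly from the per-SM figures. A minimal sketch of that arithmetic, assuming every SM carries the same 128 FP32 cores and 4 tensor cores and that disabled SMs contribute nothing; the constant and function names are illustrative only:

```python
# Per-GPU B200 figures taken from the spec list.
TOTAL_SMS = 160           # physical SMs
ACTIVE_SMS = 148          # SMs enabled on shipping parts
DIES = 2                  # 160 SMs at 80 per die implies two dies
FP32_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4


def derived_counts(sms: int) -> dict:
    """Scale the per-SM resources up to a given number of SMs (assumes uniform SMs)."""
    return {
        "fp32_cores": sms * FP32_CORES_PER_SM,
        "tensor_cores": sms * TENSOR_CORES_PER_SM,
    }


physical = derived_counts(TOTAL_SMS)
active = derived_counts(ACTIVE_SMS)

if __name__ == "__main__":
    print("SMs per die:", TOTAL_SMS // DIES)
    print("physical:", physical)
    print("active:", active)
```

Under those assumptions, the 20,480 and 640 figures describe all 160 physical SMs, while a part with 148 active SMs exposes 18,944 FP32 cores and 592 tensor cores.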

B100 is a lower-power (700 W) variant of B200 that is meant to be a "drop-in replacement" for HGX H100 platforms.[6] That is, you can take a server platform built for 8-way H100 baseboards, swap in B100 baseboards, and sell the result without having to re-engineer power or thermals.

Performance

The following are the theoretical peak performance figures, in TFLOPS, for the GB200 variant of B200 when configured at 1,200 W:[6]

| Data Type | Vector FMA | Matrix (dense) | Matrix (sparse) |
|---|---|---|---|
| FP64 | 45 | 45 | |
| FP32 | 90 | | |
| TF32 | | 1250 | 2500 |
| FP16 | | 2500 | 5000 |
| BF16 | | 2500 | 5000 |
| FP8 | | 5000 | 10000 |
| FP6 | | 5000 | 10000 |
| FP4 | | 10000 | 20000 |
| INT8 | | 5000 | 10000 |
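One way to read the table is as a roofline. Dividing a dense tensor-core peak by HBM bandwidth gives the arithmetic intensity (FLOPs per byte moved from HBM) that a kernel needs before it becomes compute-bound rather than bandwidth-bound. The sketch below assumes the 8 TB/s peak from the spec list applies to this 1,200 W configuration and uses the lower, dense value of each pair in the table (the higher value is exactly 2x, the speedup 2:4 structured sparsity targets); the names are hypothetical:

```python
# Dense peak throughput for GB200 at 1,200 W, in TFLOPS (lower value of each table pair).
DENSE_TFLOPS = {
    "FP64": 45,
    "TF32": 1250,
    "FP16": 2500,
    "BF16": 2500,
    "FP8": 5000,
    "FP4": 10000,
}
HBM_TB_PER_S = 8.0  # peak HBM3e bandwidth from the spec list (assumed to apply here)


def ridge_point(peak_tflops: float, tb_per_s: float = HBM_TB_PER_S) -> float:
    """FLOPs per byte at which the roofline turns from bandwidth- to compute-bound.

    TFLOP/s divided by TB/s leaves FLOP/byte, so the 1e12 factors cancel.
    """
    return peak_tflops / tb_per_s


def attainable_tflops(intensity: float, peak_tflops: float,
                      tb_per_s: float = HBM_TB_PER_S) -> float:
    """Simple roofline: the lesser of the compute peak and bandwidth * intensity."""
    return min(peak_tflops, tb_per_s * intensity)


if __name__ == "__main__":
    for dtype, peak in DENSE_TFLOPS.items():
        print(f"{dtype:5s} ridge point: {ridge_point(peak):8.2f} FLOP/byte")
```

For FP8 the ridge point works out to 625 FLOP/byte, so under this simple model a kernel performing 100 FLOPs per byte of HBM traffic would be capped at about 800 TFLOPS by bandwidth.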

The HGX variant of B200 supports the following when configured at 1,000 W:[6][7]

| Data Type | Vector FMA | Matrix (dense) | Matrix (sparse) |
|---|---|---|---|
| FP64 | 37 | 37 | |
| FP32 | 75 | | |
| TF32 | | 1125 | 2250 |
| FP16 | | 2250 | 4500 |
| BF16 | | 2250 | 4500 |
| FP8 | | 4500 | 9000 |
| FP6 | | 4500 | 9000 |
| FP4 | | 9000 | 18000 |
| INT8 | | 4500 | 9000 |
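Comparing the two tables against their configured power limits gives a rough sense of efficiency. A minimal sketch using the dense FP8 figures (5,000 and 4,500 TFLOPS, the lower value of each FP8 pair), assuming each GPU sits at its configured power limit when running at peak; real power draw and sustained clocks will differ:

```python
# Dense FP8 throughput (TFLOPS) and configured power (W) for the two configurations.
CONFIGS = {
    "GB200 (1,200 W)": {"fp8_tflops": 5000, "watts": 1200},
    "HGX B200 (1,000 W)": {"fp8_tflops": 4500, "watts": 1000},
}


def tflops_per_watt(cfg: dict) -> float:
    """Peak throughput per watt, assuming the GPU draws exactly its power limit."""
    return cfg["fp8_tflops"] / cfg["watts"]


if __name__ == "__main__":
    gb200 = CONFIGS["GB200 (1,200 W)"]
    hgx = CONFIGS["HGX B200 (1,000 W)"]
    for name, cfg in CONFIGS.items():
        print(f"{name}: {tflops_per_watt(cfg):.2f} TFLOPS/W")
    speedup = gb200["fp8_tflops"] / hgx["fp8_tflops"]
    extra_power = gb200["watts"] / hgx["watts"]
    print(f"GB200: {speedup:.3f}x the throughput for {extra_power:.2f}x the power")
```

Under that assumption, the 1,000 W HGX configuration delivers about 4.5 TFLOPS/W versus about 4.17 TFLOPS/W at 1,200 W: the extra 20% of power buys roughly 11% more peak throughput.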

GB200

GB200 is a combination of Grace CPUs and B200 GPUs in a single coherent memory domain. There are a couple of variants which are described below.

GB200 NVL4

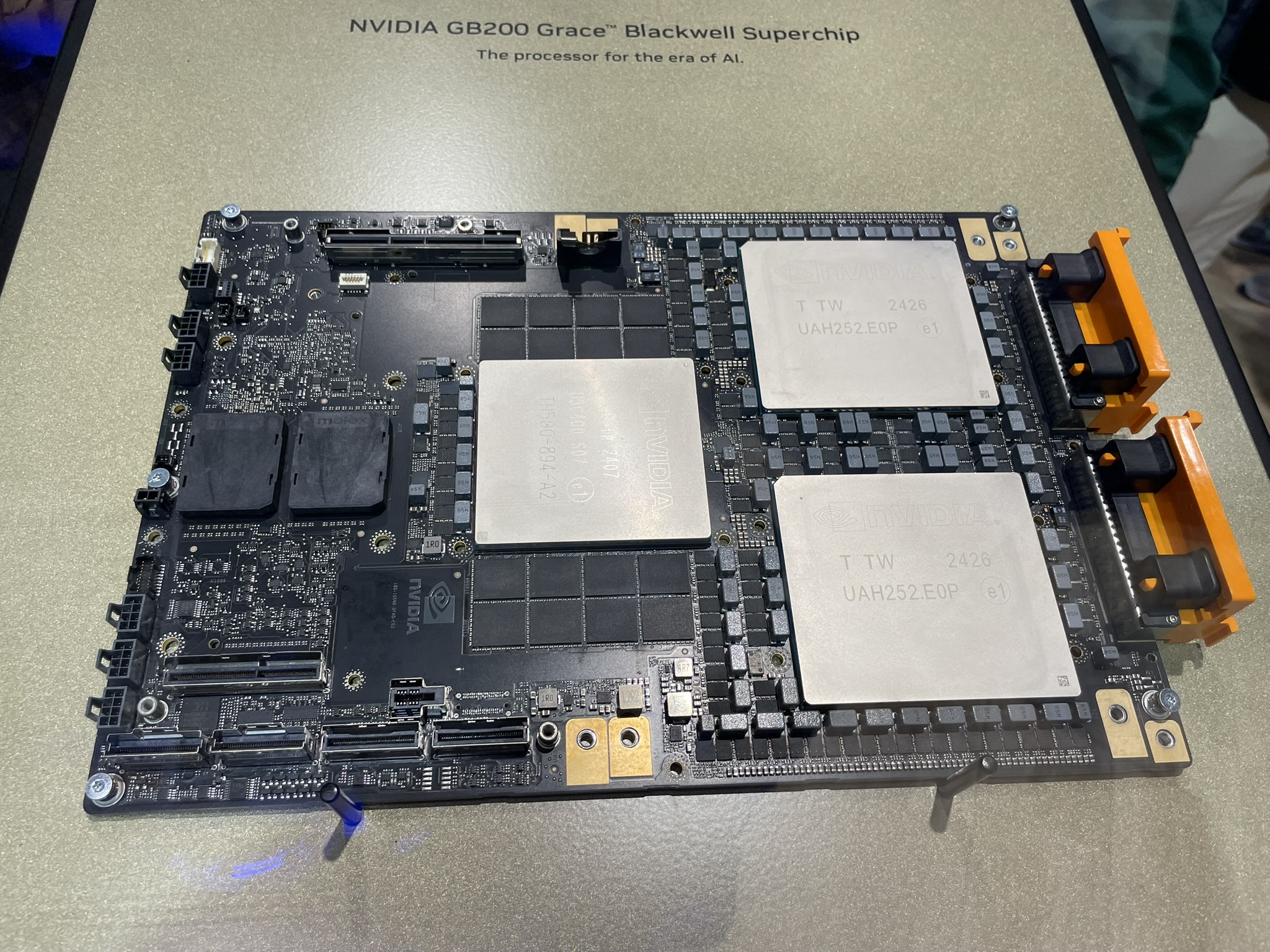

GB200 NVL4 is a single board with two Grace CPUs and four Blackwell GPUs, all soldered down. The photo above shows one on display at SC24.

This variant supports only four B200 GPUs per NVLink domain and appears to be the preferred choice for traditional scientific computing workloads, which neither need nor can afford the rack-scale NVL72 domain.

Further evidence of this affinity for HPC is the Cray EX154n blade, which puts this NVL4 node in the Cray EX form factor.

GB200 NVL72

GB200 NVL72 is the rack-scale implementation of GB200, which connects 72 B200 GPUs in a single NVLink domain. It is composed of:

- GB200 superchip boards, each with two B200 GPUs and one Grace CPU

- Server sleds, each containing two GB200 superchip boards

- NVLink Switch sleds, each containing two NVLink 5 Switch ASICs

- NVLink cable cartridges

- Power shelves to power the rear bus bar

- Liquid cooling manifolds

A single GB200 NVL72 rack is purported to cost around $3 million.[8]

The rack is referred to as the Oberon rack.[9]
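The per-GPU figures scale to rack totals by simple multiplication. A sketch, assuming all 72 GPUs run in the 1,200 W GB200 configuration (using the lower, dense FP4 value of 10,000 TFLOPS) and taking the approximate $3 million price at face value; the per-GPU cost it reports covers the whole rack (Grace CPUs, switches, cabling, cooling), not just the GPU:

```python
GPUS_PER_RACK = 72
HBM_GB_PER_GPU = 186                # B200 HBM3e capacity from the spec list
FP4_DENSE_TFLOPS_PER_GPU = 10_000   # GB200 at 1,200 W, dense FP4
RACK_PRICE_USD = 3_000_000          # approximate, reported price


def rack_totals(gpus: int = GPUS_PER_RACK) -> dict:
    """Scale per-GPU capacity, throughput, and price to a whole NVL72 rack."""
    return {
        "hbm_tb": gpus * HBM_GB_PER_GPU / 1000,                      # decimal TB
        "fp4_dense_pflops": gpus * FP4_DENSE_TFLOPS_PER_GPU / 1000,
        "usd_per_gpu": RACK_PRICE_USD / gpus,                        # whole-rack cost per GPU
    }


if __name__ == "__main__":
    for key, value in rack_totals().items():
        print(f"{key}: {value:,.1f}")
```

This gives roughly 13.4 TB of HBM, 720 PFLOPS of dense FP4, and about $41,700 of rack cost per GPU.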

The 1C:2G GB200 superchip looks like this:

From left to right are one Grace CPU surrounded by LPDDR5X, two B200 GPUs, and the NVLink connectors (orange) which mate with the cable cartridges. Two of the above superchips fit in a single server sled.

There are two variants of the rack-scale architecture:

- 1 rack with 72 GPUs (18 server trays) and 9 NVLink Switch trays. This consumes around 120 kW per rack and is the mainstream variant.

- 2 racks, each with 36 GPUs (9 server trays)

The two-rack variant requires ugly cross-rack cabling in the front of the rack to join each rack’s NVLink Switches into a single NVL72 domain. This two-rack form factor exists for datacenters that cannot support 120 kW racks.
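The composition list and the two rack variants are consistent with each other, which a few lines of arithmetic can confirm. A sketch, assuming the per-board and per-sled counts from the composition list; the function name is hypothetical, and the per-GPU power share folds in CPUs, switches, and everything else in the rack:

```python
GPUS_PER_SUPERCHIP = 2      # two B200 GPUs per GB200 superchip
SUPERCHIPS_PER_SLED = 2     # two superchips per server sled
GRACE_PER_SUPERCHIP = 1     # one Grace CPU per superchip
ASICS_PER_SWITCH_SLED = 2   # two NVLink 5 Switch ASICs per switch sled


def rack_layout(racks: int, gpus_per_rack: int) -> dict:
    """Derive component counts for one NVL72 domain split across `racks` racks."""
    gpus_per_sled = GPUS_PER_SUPERCHIP * SUPERCHIPS_PER_SLED
    if gpus_per_rack % gpus_per_sled:
        raise ValueError("GPUs per rack must fill whole server sleds")
    gpus = racks * gpus_per_rack
    superchips = gpus // GPUS_PER_SUPERCHIP
    return {
        "gpus": gpus,
        "superchips": superchips,
        "grace_cpus": superchips * GRACE_PER_SUPERCHIP,
        "server_sleds_per_rack": gpus_per_rack // gpus_per_sled,
    }


single = rack_layout(racks=1, gpus_per_rack=72)
split = rack_layout(racks=2, gpus_per_rack=36)

# The switch ASIC count and power share below use the single-rack figures only.
switch_asics = 9 * ASICS_PER_SWITCH_SLED        # 9 NVLink Switch trays
watts_per_gpu = 120_000 / single["gpus"]         # ~120 kW rack, all components

if __name__ == "__main__":
    print("single rack:", single)
    print("two racks:", split)
    print("NVLink Switch ASICs:", switch_asics)
    print(f"rack power per GPU: {watts_per_gpu:,.0f} W")
```

Both variants yield 72 GPUs and 36 Grace CPUs; the single rack holds 18 server sleds and each rack of the two-rack variant holds 9, while the nine switch trays carry 18 NVLink Switch ASICs. Spread over 72 GPUs, ~120 kW works out to roughly 1.67 kW per GPU.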

Server sleds and NVLink Switch sleds are interconnected using four rear-mounted cable cartridges, illuminated in the mockup of the DGX GB200 below, which was on display at GTC25:

See NVIDIA's "GB200 NVL72" page for more information.

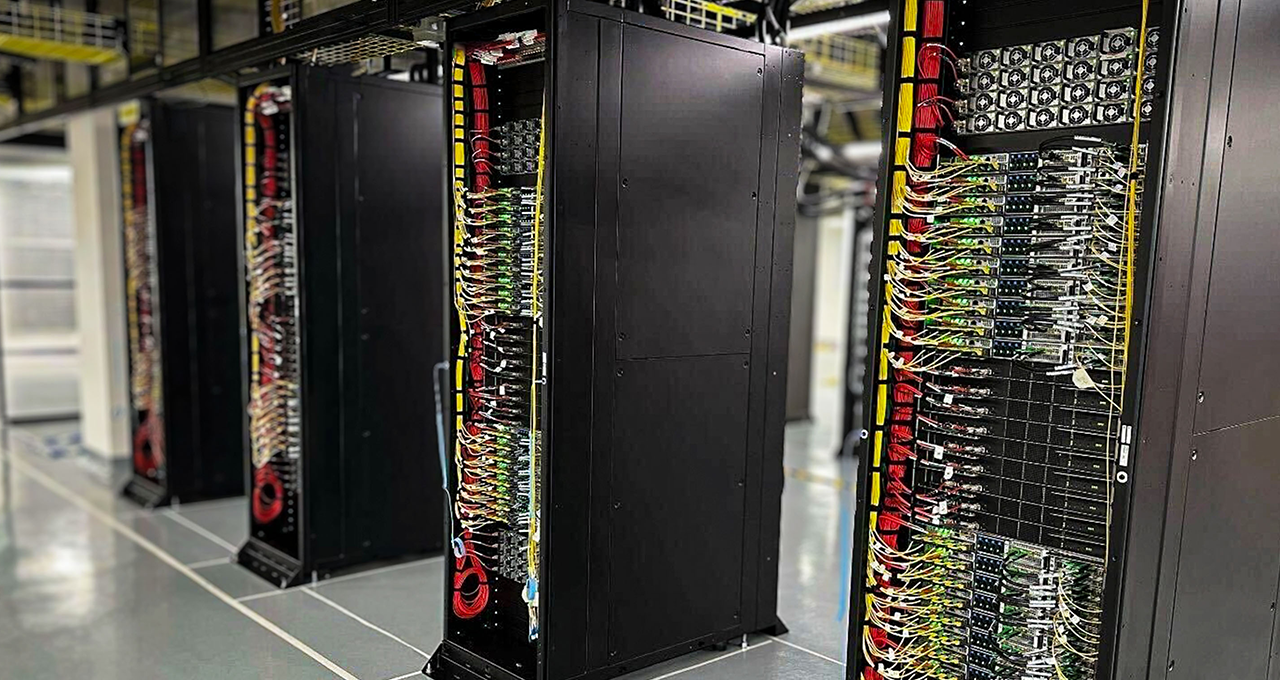

Hyperscale GB200 NVL72 has to be deployed sparsely because it is still partially air-cooled. OCI has shared the following photo of one of their deployments:[10]

You can see blank tile space between each rack; this is required to ensure that each rack has sufficient ambient air volume to cool its non-liquid-cooled parts. Microsoft has shown a similar datacenter layout for a GPU cluster as well.[11]