Kyber is the successor to NVIDIA’s Oberon rack design for its scale-up GPU configurations. It will first appear in VR300 NVL576.[1]

From Nvidia’s Jensen Huang, Ian Buck, and Charlie Boyle on the future of data center rack density:

Kyber also includes a rack-sized sidecar to handle power and cooling. Therefore, while it is a 600kW rack, it requires two racks’ worth of physical footprint, at least in the current version shown by Nvidia.

At COMPUTEX 2025, NVIDIA revealed its plan to develop 800 VDC row-scale power distribution for these Kyber racks.[2]
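To see why a 600 kW rack pushes toward higher distribution voltage, here is a back-of-the-envelope sketch of I = P / V. It assumes the full 600 kW flows over a single DC bus with conversion losses and redundancy ignored. The 54 VDC comparison point is my assumption for a conventional in-rack busbar, not a figure NVIDIA gave for Kyber.

```python
# Back-of-the-envelope DC bus current for one 600 kW Kyber rack.
# Assumptions (not from NVIDIA): all power flows over one DC bus,
# with conversion losses and redundancy ignored.
RACK_POWER_W = 600_000


def bus_current_amps(power_w: float, voltage_v: float) -> float:
    """Return the DC bus current in amps, using I = P / V."""
    return power_w / voltage_v


# 54 VDC is an assumed comparison point for a conventional in-rack busbar.
for volts in (800, 54):
    print(f"{volts:>3} VDC -> {bus_current_amps(RACK_POWER_W, volts):,.0f} A")
# 800 VDC -> 750 A
#  54 VDC -> 11,111 A
```

Roughly fifteen times less current at 800 VDC is what makes row-scale distribution to a rack this dense plausible at all.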

Architectural breakdown

The following commentary about the Kyber rack comes from photos I took at GTC25 and my subsequent GTC 2025 Recap blog post.

At a glance, each Kyber rack has four compute chassis, each with:

- Eighteen front-facing, vertically mounted compute blades. Each blade supports “up to” sixteen GPUs and two CPUs. I presume a “GPU” here is a reticle-limited piece of silicon.

- Six (or eight?) rear-facing, vertically mounted NVLink Switch blades. All GPUs are connected to each other via a nonblocking NVLink fabric.

- A passive midplane that “eliminates two miles of copper cabling.”

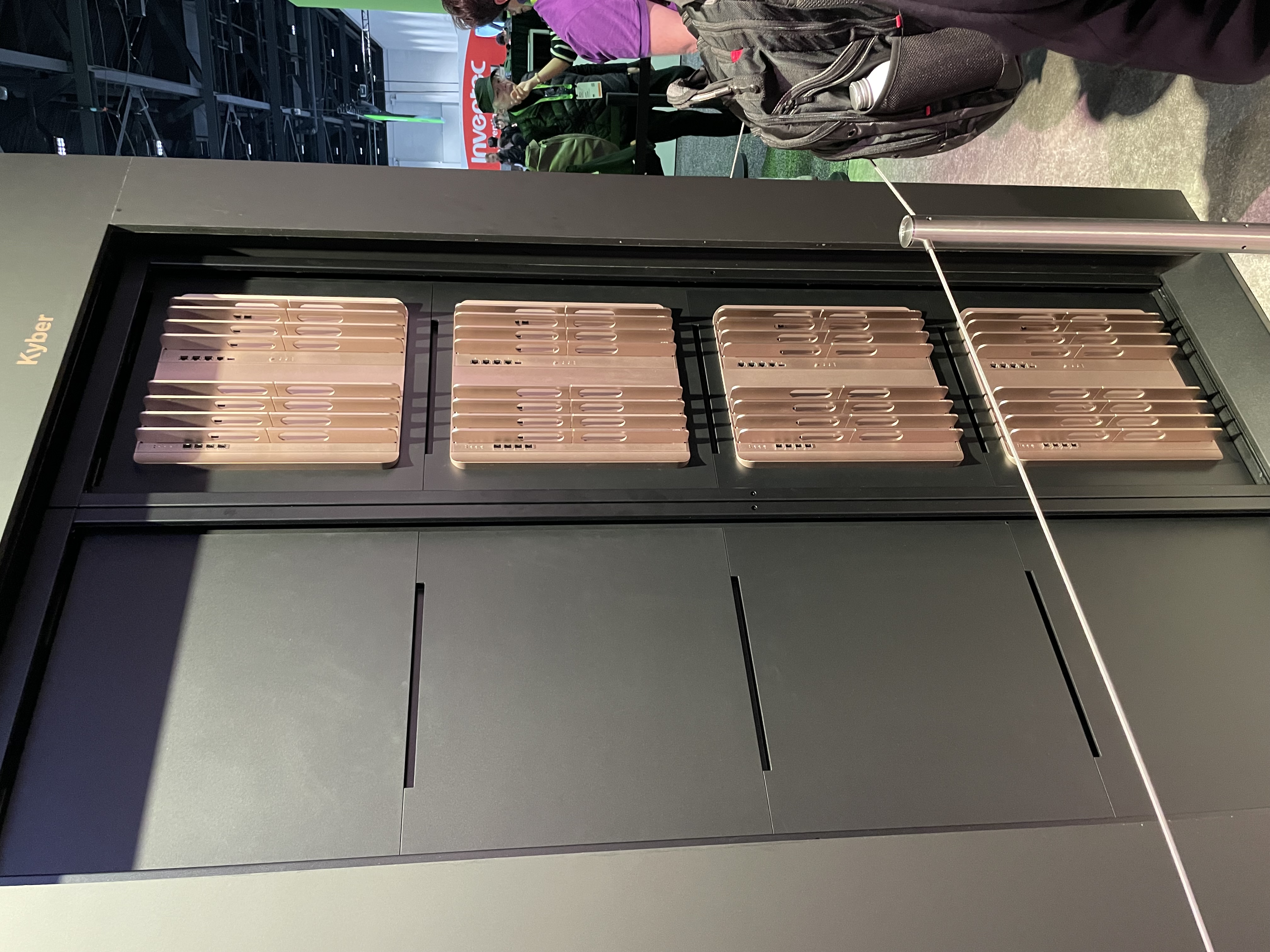

Here is a photo of the rear of a Kyber rack, showing all four chassis:

Examining one of those chassis closely, you can see two groups of four blades:

Six of these blades are identical NVLink Switch blades, but it wasn’t clear what the other two were. The two odd blades appear to have SFP+ ports, so my guess is that they are rack controller or management blades.

The front of the Kyber rack shows the row of eighteen compute blades in each chassis:

Given four chassis per rack, this adds up to 72 compute blades per rack and NVLink domain. Spreading the 576 GPUs of NVL576 across 72 blades gives 8 GPUs per compute blade; since Rubin Ultra will have four GPUs per package, each compute blade should hold two GPU packages. However, a placard next to this display advertised “up to 16 GPUs” per compute blade, so I’m not sure how to reconcile those two observations.
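The blade arithmetic, as a quick sanity check. The constants are the counts from this post; `PLACARD_GPUS_PER_BLADE` is just the placard’s “up to 16” taken at face value, which shows why it doesn’t fit an NVL576 rack.

```python
# Reconciling GPU counts per Kyber compute blade (counts from the GTC25 display).
GPUS_PER_RACK = 576          # NVL576
CHASSIS_PER_RACK = 4
BLADES_PER_CHASSIS = 18
GPUS_PER_PACKAGE = 4         # Rubin Ultra: four GPU dies per package
PLACARD_GPUS_PER_BLADE = 16  # the "up to 16 GPUs" placard claim

blades_per_rack = CHASSIS_PER_RACK * BLADES_PER_CHASSIS
assert GPUS_PER_RACK % blades_per_rack == 0, "GPUs should divide evenly across blades"
gpus_per_blade = GPUS_PER_RACK // blades_per_rack
packages_per_blade = gpus_per_blade // GPUS_PER_PACKAGE

# If every blade really held 16 GPUs, the rack would hold twice NVL576.
placard_gpus_per_rack = PLACARD_GPUS_PER_BLADE * blades_per_rack

print(blades_per_rack, gpus_per_blade, packages_per_blade, placard_gpus_per_rack)
# 72 8 2 1152
```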

From the above photo, you can see that each blade also has front-facing ports. From top to bottom, there appear to be:

- 4x NVMe slots, probably E3.S

- 4x OSFP cages, probably OSFP-XD for 1.6 Tb/s InfiniBand links

- 2x QSFP-DD(?) ports, probably for 800G BlueField-4 SuperNICs

- 1x RJ45 port, almost certainly for the baseboard management controller

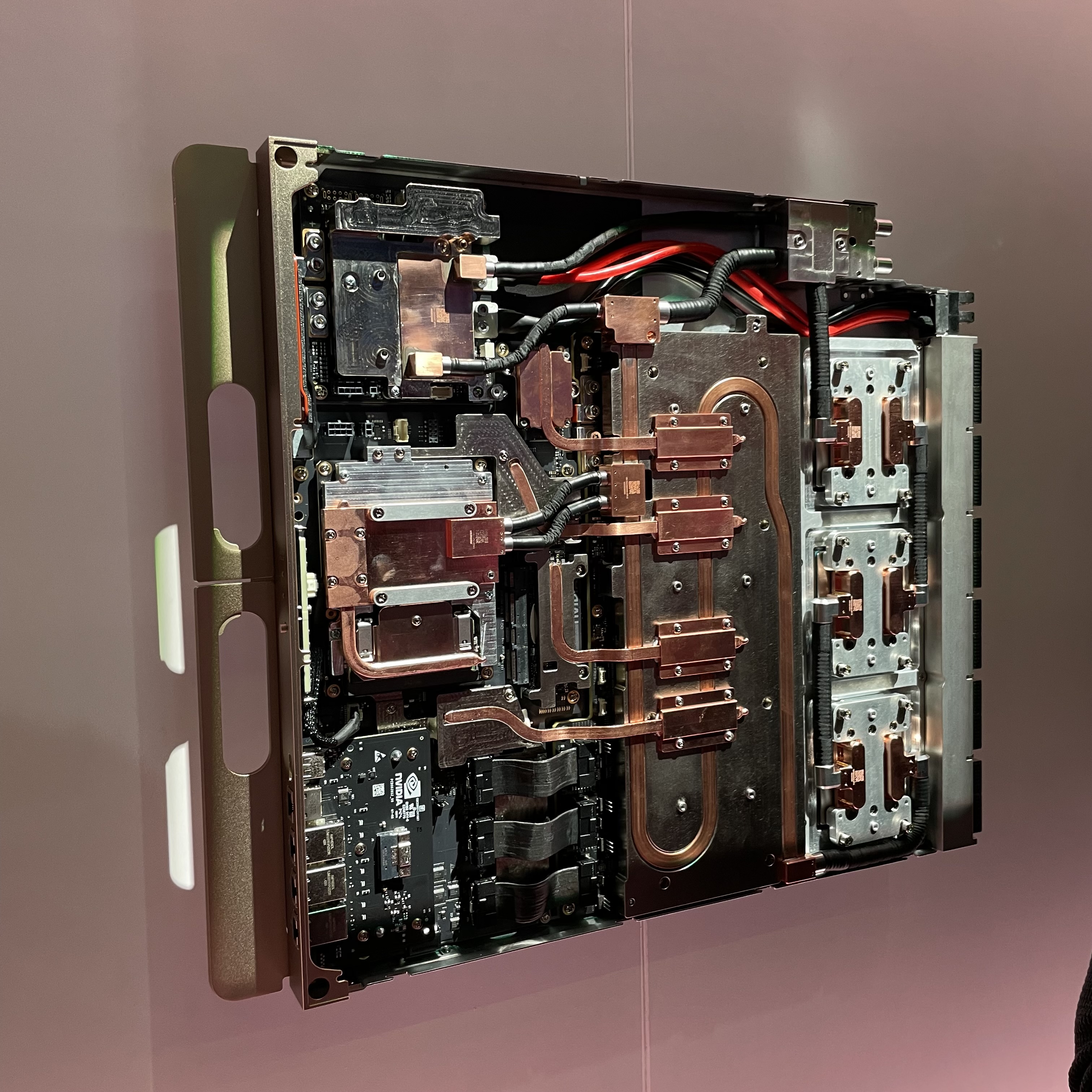

NVIDIA also had these blades pulled out for display. They are 100% liquid-cooled and are exceptionally dense due to all the copper heat pipes needed to cool the transceivers and SSDs:

The front of the blade, with the liquid-cooled SuperNIC (top), InfiniBand transceivers (middle), and SSDs (bottom), is on the left. Four cold plates for four Rubin GPUs are on the right, and two cold plates for the Vera CPUs are to the left of them. I presume the middle is the liquid manifold, and you can see the connectors for the liquid and NVLink on the right edge of the photo.

A copper midplane replaces the gnarly cable cartridges in the current Blackwell-era Oberon racks:

This compute-facing side of the midplane has 72 connector housings (4 × 18), and each housing appears to have 152 pins (19 rows × 4 columns × 2 pins per position). This adds up to a staggering 10,944 pins per midplane, per side. If my math is right, then across four midplanes and both sides, a single Kyber rack will have over 87,000 NVLink pins.
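The pin math, spelled out. The connector geometry is my estimate from photos, and the per-rack total assumes the switch-facing side of each midplane carries the same number of pins as the compute-facing side.

```python
# Estimated NVLink pin count for one Kyber rack, from the midplane photos.
BLADES_PER_CHASSIS = 18
HOUSINGS_PER_BLADE = 4
ROWS, COLUMNS, PINS_PER_POSITION = 19, 4, 2
SIDES_PER_MIDPLANE = 2   # assumption: the switch-facing side matches the compute-facing side
MIDPLANES_PER_RACK = 4   # one midplane per chassis

housings_per_side = BLADES_PER_CHASSIS * HOUSINGS_PER_BLADE              # 72
pins_per_housing = ROWS * COLUMNS * PINS_PER_POSITION                      # 152
pins_per_side = housings_per_side * pins_per_housing                       # 10,944
pins_per_rack = pins_per_side * SIDES_PER_MIDPLANE * MIDPLANES_PER_RACK    # 87,552

print(f"{pins_per_side:,} pins per side, {pins_per_rack:,} pins per rack")
# 10,944 pins per side, 87,552 pins per rack
```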

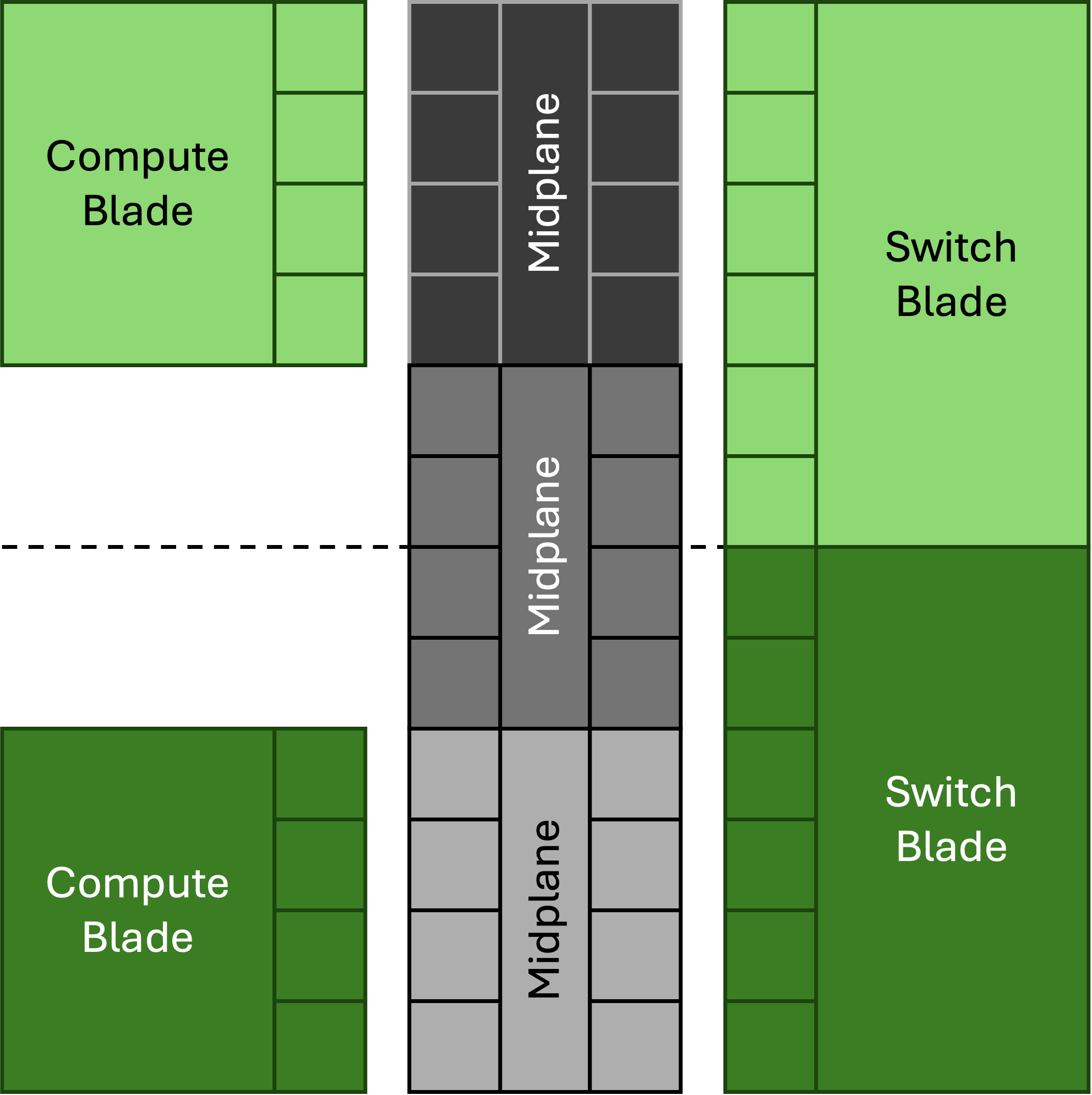

Although the midplane has cams to help line up blades when they’re being seated, these sorts of connectors freak me out due to the potential for bent pins. Unlike Cray EX (which mounts compute blades at a right angle to switch blades to avoid needing a midplane entirely), Kyber mounts both switches and compute vertically. This undoubtedly requires some sophisticated copper routing between the front-facing and rear-facing pins of this midplane.

It’s also unclear how NVLink will connect between the four chassis in each rack, but the NVLink Switch blade offers a couple of clues on how this might work:

Each switch blade has six connector housings on the right; this suggests a configuration where midplanes are used to connect to both compute blades (with four housings per blade) and to straddle switch blades (with two housings per switch):

It’s unlikely that the seventh-generation NVLink Switches used by Vera Rubin Ultra will have 576 ports each, so Kyber probably uses a two-level tree of some form.
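To put a number on “unlikely,” here is a sketch comparing the switch radix a single-level fabric would need against a two-level tree. It is purely hypothetical: it models one NVLink plane with one port per GPU and assumes a nonblocking folded Clos (leaf/spine), which NVIDIA has not confirmed for Kyber. The function names are my own.

```python
import math

NVL_DOMAIN = 576  # GPUs in one NVLink domain (NVL576)


def single_level_capacity(radix: int) -> int:
    """GPUs one switch can reach if every port faces a GPU."""
    return radix


def two_level_capacity(radix: int) -> int:
    """GPUs a nonblocking two-level folded Clos (leaf/spine) can reach.

    Each leaf sends radix/2 ports down to GPUs and radix/2 up to spines;
    a spine with `radix` ports can reach at most `radix` leaves.
    """
    return radix * (radix // 2)


def min_radix(target: int, capacity) -> int:
    """Smallest even switch radix whose topology reaches `target` GPUs."""
    radix = 2
    while capacity(radix) < target:
        radix += 2
    return radix


def leaf_spine_counts(gpus: int, radix: int) -> tuple[int, int]:
    """Leaf and spine switches needed for one plane of a two-level Clos."""
    down = radix // 2
    leaves = math.ceil(gpus / down)
    spines = math.ceil(leaves * down / radix)
    return leaves, spines


print(min_radix(NVL_DOMAIN, single_level_capacity))  # 576
k = min_radix(NVL_DOMAIN, two_level_capacity)
print(k, leaf_spine_counts(NVL_DOMAIN, k))  # 34 (34, 17)
```

Under these assumptions, a two-level tree gets by with switches of only a few dozen ports per plane, versus 576 for a flat crossbar, which is why some form of tree seems far more likely.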

In addition to the NVLink fabric, there were a lot of details missing about this Kyber platform:

- A sidecar for both power and cooling was mentioned, but the concept rack had this part completely blanked out. It is unclear how many rectifiers, transformers, and pumps would be required here.

- There wasn’t much room in the compute rack for anything other than the Kyber chassis; InfiniBand switches, power shelves, and management may live in another rack.

- It wasn’t clear how liquid and power from the sidecar would be delivered to the compute rack, but at 600 kW, cross-rack bus bars are almost a given. I’d imagine this changes the nature of data center safety standards.

- Although NVLink runs entirely through the midplane, all the InfiniBand and frontend Ethernet is still plumbed out the front of the rack. This means there will be 288 InfiniBand fibers (72 blades × 4 OSFP cages) and 144 Ethernet fibers (72 blades × 2 ports) coming out the front of every rack. For multi-plane fabrics, this will almost certainly require some wacky fiber trunks and extensive patch panels or optical circuit switching.
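The front-panel cable count follows directly from the blade faceplate inventory. This sketch assumes one cable per cage; `IB_PLANES = 4` (one InfiniBand plane per OSFP cage) is a hypothetical layout chosen only to show how a multi-plane fabric splits those links.

```python
# Front-panel cable count per Kyber rack, from the blade faceplate inventory.
# Assumes one cable per cage/port.
BLADES_PER_RACK = 4 * 18
OSFP_PER_BLADE = 4   # InfiniBand cages
QSFP_PER_BLADE = 2   # Ethernet (SuperNIC) ports
IB_PLANES = 4        # hypothetical: one plane per OSFP cage

ib_links = BLADES_PER_RACK * OSFP_PER_BLADE     # 288
eth_links = BLADES_PER_RACK * QSFP_PER_BLADE    # 144
ib_links_per_plane = ib_links // IB_PLANES      # 72

print(ib_links, eth_links, ib_links_per_plane)
# 288 144 72
```

Each of those per-plane bundles has to reach a different set of switches, which is where the fiber trunks and patch panels come in.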

However, since this was just a concept rack, there are still a few years for NVIDIA and other manufacturers to work this stuff out. Notably, Jensen went out of his way during the keynote to say that, although NVIDIA is committed to 576-way NVLink domains for Rubin Ultra, they may change the way these 576 dies are packaged. This leaves plenty of room to refine this rack design.

Here is some commentary that resulted from my GTC recap posted to Substack:

From Tanj

On the network blade the 6 connectors are not the same size each as the 4 connectors on the GPU blade. Also there are 6 switch blades for 18 GPU blades? That suggests the connectors are a different density - double density and 1.5x number of connectors?

Reply from amnon izhar:

the GPU sleds are slimmer and only show 4 diff pairs per row vs the switch sleds are thicker can support 8 diff pairs per row. The 1.5 multiplier is because the switch blade shows 3 NVlink switch per blade vs the current NVL72 that supports 2 NVLink switches per sled.
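Taken together, Tanj’s question and the reply reconcile neatly if you count differential pairs per connector row. This sketch assumes (unverified) that both housing types have the same number of rows and that all six switch-blade housings land on the same midplane as the compute blades.

```python
# Checking that the switch side of a chassis can terminate what the compute side offers.
# Counts are differential pairs per connector row, summed over all housings; this
# assumes (unverified) that both housing types have the same number of rows.
COMPUTE_BLADES, HOUSINGS_PER_COMPUTE_BLADE, PAIRS_PER_ROW_COMPUTE = 18, 4, 4
SWITCH_BLADES, HOUSINGS_PER_SWITCH_BLADE, PAIRS_PER_ROW_SWITCH = 6, 6, 8

compute_pairs = COMPUTE_BLADES * HOUSINGS_PER_COMPUTE_BLADE * PAIRS_PER_ROW_COMPUTE  # 288
switch_pairs = SWITCH_BLADES * HOUSINGS_PER_SWITCH_BLADE * PAIRS_PER_ROW_SWITCH      # 288

# One third as many blades, each with 1.5x the housings at 2x the pairs per row.
print(compute_pairs, switch_pairs, compute_pairs == switch_pairs)
# 288 288 True
```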