Warning

Although I work for VAST Data at the time of writing, I have no inside knowledge about ICMS. The notes that follow are what I’ve collected from public sources, since that’s all that I have access to.

NVIDIA’s Inference Context Memory Storage (ICMS) appears to be an architectural blueprint whereby pooled NVMe is attached to the frontend (north-south) network of a VR200 superpod (which NVIDIA calls a pod?) before the network taper.1 Specifically,

- NVMe SSDs live in “ICMS flash enclosures”

- ICMS flash enclosures are attached to the Ethernet-based “AI-optimized RDMA fabric”.2

- This is the GPU cluster’s frontend (north-south) network.

- ICMS flash enclosures and GPU nodes have nonblocking connectivity.

- Each GPU server has a BlueField-4 DPU which also connects to the “AI-optimized RDMA fabric.”

- Control logic runs in the BlueField-4 DPU in each GPU server.

- The DPU speaks to ICMS flash enclosures using “NVMe-oF and object/RDMA.”2 The latter is presumed to be S3-over-RDMA.

- The DPU exposes an API into the GPU server (the host) that allows applications to access data stored on any ICMS flash enclosure within the superpod.

- Applications interact with a shared pool of KV cache that is distributed across ICMS enclosures.

- The low-level host API appears to be DOCA’s new “KV communication and storage layer.”

- NIXL probably layers on top of this DOCA interface.

- Dynamo’s KV Block Manager likely layers on top of NIXL.

This is fundamentally a disaggregated storage architecture, whereby persistence (ICMS flash enclosures) is controlled by logic (BlueField-4 DPU cores) that lives on the other side of an RDMA fabric.

Physical implementation

At Jensen’s CES presentation, he explained,

“Behind each one of these [black boxes in the racks adjacent to the NVL72 racks] are four BlueFields. Behind each BlueField is 150 TB of context memory. And for each GPU, once you allocate it across each GPU, will get an additional 16 TBs.”

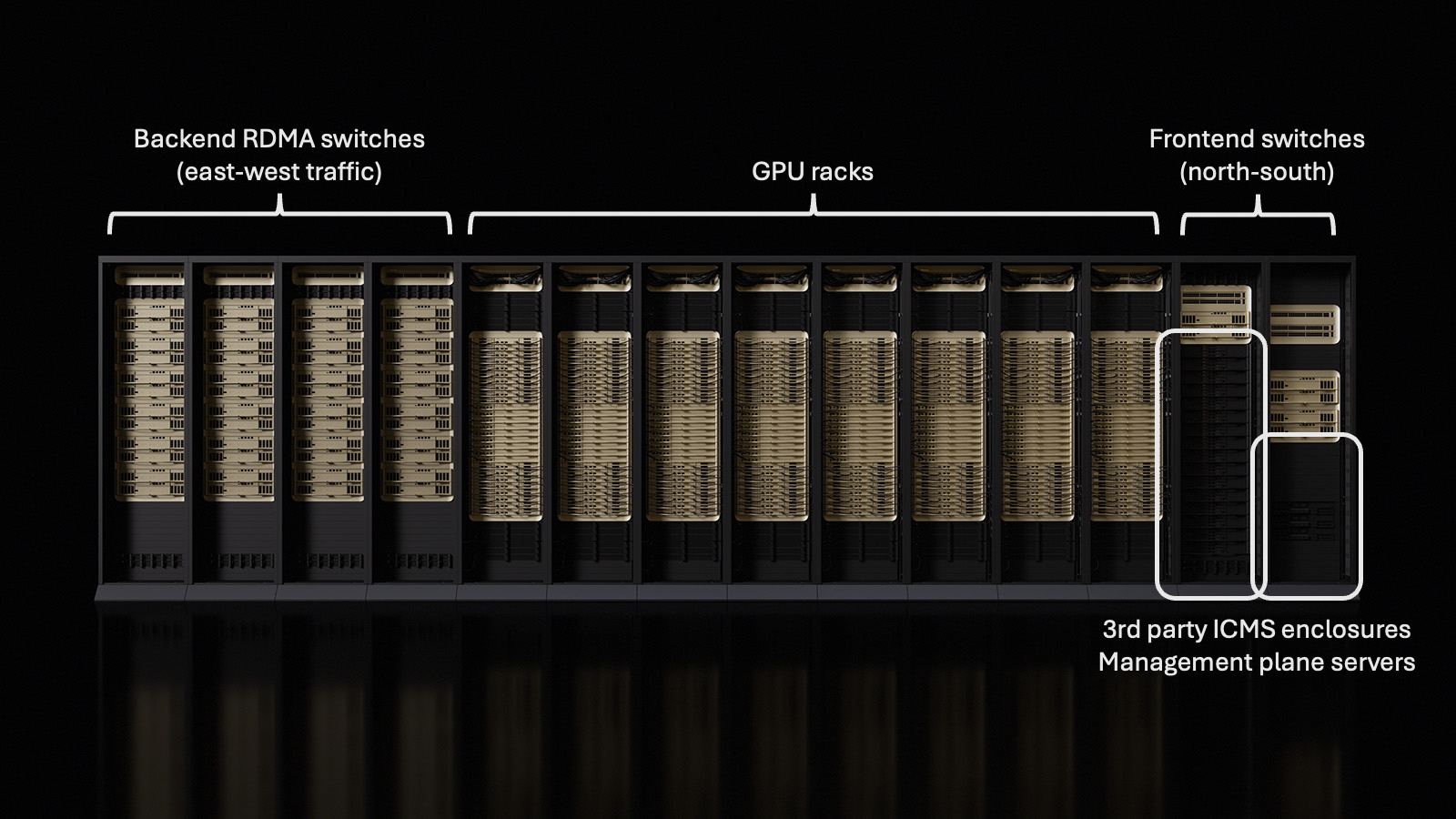

NVIDIA released the following image of a SuperPOD which Jensen explained as follows:3

- The leftmost four racks are Spectrum-X CPO switches for the backend RDMA fabric.

- The middle eight racks are VR200 NVL144 racks.

- The next rack to the right contains Spectrum-X CPO switches for the frontend “AI-optimized RDMA fabric” and a stack of ICMS flash enclosures (which are black and impossible to see).

- The next rack to the right contains more CPO switches for the frontend and miscellaneous stuff for the SuperPOD’s “management plane.”

The enclosures are hard or impossible to see because they are black. This reads as a deliberate statement that NVIDIA does not want to gold-label the storage component of this SuperPOD and is leaving it up to third-party storage providers to implement it.

Jensen described the ICMS flash enclosures as having 4x BlueField-4 and 600 TB of storage. Incidentally, this aligns exactly with the hardware specs of the AIC F2032-G6 JBOF, which was announced at the same time as ICMS.4

Given that Jensen said:

- A SuperPOD will have 1,152 R200 GPUs

- Each GPU will have 16 TB of ICMS available to it

- There are 32x15.36 TB NVMes per F2032-G6 ICMS enclosure

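A SuperPOD will have a total of 18,432 TB of ICMS (1,152 × 16 TB). That works out to 36x 2U enclosures if each drive is counted as a nominal 16 TB (512 TB per enclosure), or 38 at the raw 15.36 TB per drive (491.52 TB per enclosure).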
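The enclosure count depends on which per-enclosure capacity is assumed, so the arithmetic is worth spelling out. The following is a minimal sketch using only the figures quoted above; the constant and function names are made up for illustration, and the "nominal 16 TB drive" case is my assumption about how a round number like 36 would be reached.

```python
import math

# Figures quoted in the text above.
GPUS_PER_SUPERPOD = 1152
TB_PER_GPU = 16
DRIVES_PER_ENCLOSURE = 32
TB_PER_DRIVE = 15.36
BLUEFIELDS_PER_ENCLOSURE = 4
TB_PER_BLUEFIELD = 150

total_tb = GPUS_PER_SUPERPOD * TB_PER_GPU


def enclosures_needed(capacity_tb: float, tb_per_enclosure: float) -> int:
    """Whole enclosures required to provide at least capacity_tb."""
    return math.ceil(capacity_tb / tb_per_enclosure)


# Three ways of sizing one enclosure; only the first and last come
# straight from the quoted specs. The middle one is an assumption.
per_enclosure_tb = {
    "32 x 15.36 TB drives": DRIVES_PER_ENCLOSURE * TB_PER_DRIVE,
    "32 x 16 TB drives (nominal, assumed)": DRIVES_PER_ENCLOSURE * 16,
    "4 BlueFields x 150 TB (keynote)": BLUEFIELDS_PER_ENCLOSURE * TB_PER_BLUEFIELD,
}

print(f"Total ICMS capacity: {total_tb} TB")
for label, tb in per_enclosure_tb.items():
    count = enclosures_needed(total_tb, tb)
    print(f"{label}: {tb:.2f} TB per enclosure, {count} enclosures")
```

The three sizings give 38, 36, and 31 enclosures respectively, so the keynote's 600 TB figure and the F2032-G6 drive count do not produce the same rack layout.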

Software implementations

NVIDIA named many launch partners,1 but the accompanying press statements offered no insight into how ICMS will be implemented in practice by third parties. Only VAST Data offered any substantive details about how they integrate into this architecture.5

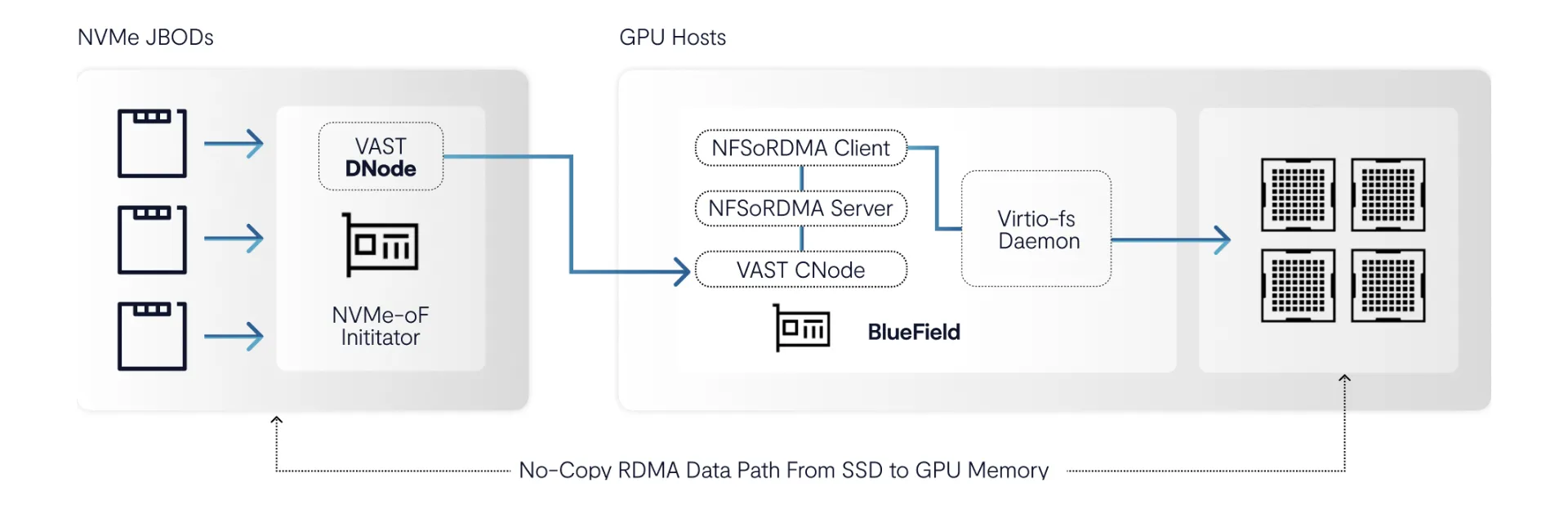

Specifically, VAST described an implementation whereby their CNode software runs inside the BlueField-4 inside each GPU server, enabling zero-copy from a remote SSD to GPU memory, and virtio-fs provides the control-plane interface into the GPU host. This suggests that ICMS will still use a file interface here. This is detailed below.

VAST implementation

Since VAST seems to be the only ICMS software partner with a plan, it’s worth preserving here:6

From this, we can assume the following:

- There are NVMe JBOFs (the “ICMS flash enclosures”) in racks somewhere, likely hanging off of the same TOR switches as the GPU servers. They are likely in separate physical racks, since there is neither free space nor available power in Oberon racks. These look like standard VAST DBoxes, which already use self-hosted DPUs.

- BlueField-4 DPUs in every GPU server provide control-plane logic that transforms storage targets (NVMe-oF targets in VAST’s case, but object-over-RDMA in the future?) into a unified namespace that NIXL can access. In VAST’s case, this is where the CNode software will run.

- NIXL in the host interfaces with a file interface, exposed through virtio-fs that is running on the DPU, to authenticate I/Os between the ICMS devices and the host application. This is different from NVIDIA’s proclamation that DOCA exposes the KV API for ICMS.

- Data movement is actually zero-copy from remote storage, through the DPU, into GPU HBM.

This makes sense given the way VAST is architected. The only inconsistency with NVIDIA’s description2 is that DOCA is the host interface into ICMS according to NVIDIA, but virtio-fs (file) is the host interface into ICMS according to VAST.

WEKA implementation?

WEKA could elect to run its server (and client?) inside the BlueField-4 and expose an interface via virtio-fs as well, but it is unclear what advantage this would offer. WEKA already runs its clients and servers inside the GPU hosts in its hyperconverged mode (Augmented Memory Grid), but this mode also exploits locally attached SSDs.

If WEKA were to use remote NVMe targets from the ICMS enclosure, most of the benefits of its hyperconverged mode would be lost. Even though a WEKA client could feasibly talk to its colocated WEKA server (I assume WEKA is smart enough to do this), the WEKA server would still have to perform its block and erasure code traffic over the network. What’s worse, this traffic would flow over the GPU nodes’ frontend networks, which is antithetical to the value proposition of WEKA’s hyperconverged mode using the fast backend fabric to coordinate data transfers.

Assuming the hyperconverged mode is not used, WEKA relies on its flash being closely managed by dedicated CPUs to provide low latency. This implies that a WEKA ICMS solution would require the “ICMS flash enclosures” to be CPU-rich, effectively turning this into a separate WEKA cluster that is simply attached via the GPU cluster’s frontend network below a network taper. It is unclear what role the DPUs would play in such a case.

Other implementations?

There may be other ways to effectively run something like Lustre in this configuration, but it isn’t clear to me how much benefit would be offered. I could envision:

- Dumb JBOFs (ICMS flash enclosures) serve up individual, unprotected SSDs to BlueField-4 DPUs as NVMe targets

- A full Lustre OSS runs inside the DPU and manages the NVMe-oF targets as OSTs

- A Lustre client also runs inside the DPU to mount the namespace. This is not how anyone runs Lustre outside of test environments.

- Use virtio-fs to expose the Lustre client mount from the DPU into the GPU host.

There would be no architectural benefit of colocating the Lustre client and Lustre server in the same DPU, since clients have no way to prefer reading/writing data from locally attached OSTs. I/Os would have to hop between DPUs before hopping to NVMe targets, and this hopping would happen over the slow frontend network.
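A rough way to quantify that hopping: if file stripes land uniformly across OSTs and every DPU serves an equal share of them (both simplifying assumptions, not a description of Lustre's actual allocator), the chance that a given I/O is served by the client's own DPU is just one over the number of DPUs. The function name and DPU counts below are hypothetical.

```python
def local_io_fraction(num_dpus: int) -> float:
    """Fraction of I/Os served by the client's own DPU under uniform placement."""
    if num_dpus < 1:
        raise ValueError("need at least one DPU")
    return 1.0 / num_dpus


# Hypothetical cluster sizes, chosen only to show the trend.
for n in (2, 8, 64, 256):
    local = local_io_fraction(n)
    print(f"{n:>4} DPUs: {local:.2%} local, {1 - local:.2%} hop to another DPU")
```

At any realistic scale, nearly every I/O takes the extra DPU-to-DPU hop, which is why colocation buys nothing here.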

Non-implementations

The other ICMS launch partners only made vague claims about ICMS or BlueField-4:

- Cloudian drew a thematic connection, but offered no integration with BlueField-4.7

- DDN claimed they use BlueField-4 for “metadata processing, telemetry, and control-plane operations.” No indication of how it supports ICMS.8

- Dell simply stated it is “developing storage solutions designed to unlock and amplify the capabilities of NVIDIA BlueField-4.”9

- IBM made a vague claim that “When integrated with IBM Storage Scale, BlueField-4 provides efficient sharing of KV cache.” No indication of how this integration happens or how this aligns with ICMS.10

- Nutanix simply states, “Nutanix will also support the NVIDIA Inference Context Memory Storage Platform with the NVIDIA BlueField-4 storage processor.”11 Unclear what this may mean, but it sounds like an API compatibility statement.

- WEKA draws a thematic connection, but offers no integration with BlueField-4. It is simply “aligned with WEKA’s Augmented Memory Grid.”12

DIY implementations

The KV cache use case is simple enough that rolling one’s own simple ICMS software to fit the ICMS architectural model wouldn’t be hard. It would be conceptually straightforward to use a distributed hash table that is spread across the client-side DPUs to track cached KV objects.

Because this is all cache, no resilience or data protection features would be required: lost values can simply be recomputed. In addition, KV vectors are immutable by definition, so write-once, read-many semantics are all that would be required. This means that no fancy locking or delegations would have to be implemented. The trickiest parts would be around fault detection.
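To make the DIY idea concrete, here is a toy, single-process sketch of such a directory: a consistent-hash ring assigns each KV block ID to one DPU, publishing is write-once (first writer wins), and losing a DPU simply turns its entries into cache misses. Everything here is hypothetical, including the class name, the DPU and enclosure labels, and the `(enclosure, offset)` location tuple. It does not use DOCA, NIXL, or any NVIDIA API, and it leaves out networking and fault detection entirely.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Stable 64-bit hash used to place both DPUs and block IDs on the ring."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class KVCacheDirectory:
    """Toy consistent-hash directory of KV block locations, sharded by DPU."""

    def __init__(self, dpus, vnodes=64):
        # Several virtual points per DPU smooth out the key distribution.
        self._ring = sorted((_hash(f"{d}#{v}"), d) for d in dpus for v in range(vnodes))
        self._points = [p for p, _ in self._ring]
        self._tables = {d: {} for d in dpus}  # each DPU's shard of the directory

    def owner(self, block_id: str) -> str:
        """DPU whose shard is responsible for this block ID."""
        i = bisect.bisect_right(self._points, _hash(block_id)) % len(self._ring)
        return self._ring[i][1]

    def publish(self, block_id: str, location) -> bool:
        """Write-once: record the location unless one is already recorded."""
        table = self._tables[self.owner(block_id)]
        if block_id in table:
            return False  # immutable blocks never need overwriting
        table[block_id] = location
        return True

    def lookup(self, block_id: str):
        """Return the recorded location, or None (caller recomputes the KV block)."""
        return self._tables[self.owner(block_id)].get(block_id)

    def drop_dpu(self, dpu: str) -> None:
        """Simulate a DPU failure: its shard is lost, nothing is recovered."""
        self._ring = [(p, d) for p, d in self._ring if d != dpu]
        self._points = [p for p, _ in self._ring]
        self._tables.pop(dpu, None)


directory = KVCacheDirectory(["dpu-0", "dpu-1", "dpu-2", "dpu-3"])
block_id = hashlib.sha256(b"example token prefix").hexdigest()
directory.publish(block_id, ("enclosure-07", 4096))
directory.publish(block_id, ("enclosure-11", 0))  # ignored: already published
print(directory.lookup(block_id))

directory.drop_dpu(directory.owner(block_id))
print(directory.lookup(block_id))  # miss after the owning DPU is lost
```

Because a miss is always safe, the failure path needs no repair protocol. The directory only has to stop routing to the dead DPU, which is where the fault detection difficulty comes in.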

It would not surprise me if NVIDIA implements a basic version of such an ICMS software directly in Dynamo’s KV Block Manager.

Prospects

Although Jensen gave a precise definition of ICMS flash enclosures (4x BlueField-4 DPUs and 600 TB of NVMe per unit), it is unclear whether third parties can propose other ICMS enclosures and/or software and still claim the ICMS brand. If ICMS is truly prescriptive and must be BlueField-4 JBOFs + BlueField-4 clients,

- AIC has a lock on the entire market for hardware here. As far as I know, nobody else makes this sort of JBOF.

- VAST Data has a lock on the entire market for commercial software here. As far as I know, VAST is the only company using AIC’s BlueField-equipped JBOFs,13 and they also already ported their CNodes to run on client-side BlueFields.14

However, I think DIY implementations are simple enough that this would be the predominant mode for large-scale deployments at major model training labs.

Footnotes

- https://nvidianews.nvidia.com/news/nvidia-bluefield-4-powers-new-class-of-ai-native-storage-infrastructure-for-the-next-frontier-of-ai

- https://developer.nvidia.com/blog/introducing-nvidia-bluefield-4-powered-inference-context-memory-storage-platform-for-the-next-frontier-of-ai/

- AIC Expands NVIDIA BlueField-Accelerated Storage Portfolio With New F2032-G6 JBOF Storage System to Accelerate AI Inference

- More Inference, Less Infrastructure: VAST and NVIDIA in Action - VAST Data

- More Inference, Less Infrastructure: How Customers Achieve Breakthrough Efficiency with VAST Data and NVIDIA

- How Ephemeral AI Storage Saves Cost and Increases Performance

- Dell and NVIDIA Expand the Horizons of AI Inference | Dell

- Accelerating NVIDIA Dynamo with IBM Storage Scale and NVIDIA BlueField‑4‑Powered Inference Context Memory Storage Platform

- Nutanix Accelerates Agentic AI Time to Value with the NVIDIA Rubin Platform

- The Context Era: AI Inference & Augmented Memory Grid - WEKA

- VAST Data launches Ceres storage drive enclosure (blocksandfiles.com)

- VAST Data turns BlueField3 DPUs into storage controllers for Nvidia GPU servers (blocksandfiles.com)