Introduction

File system I/O is a major challenge at extreme scale for two reasons:

- a coherent and consistent view of the data must be maintained for data that is typically distributed across hundreds or thousands of storage servers

- tens or hundreds of thousands of clients (compute nodes) may all be modifying and reading the data at the same time

That is, a lot of parallel reads and writes from a distributed computing system need to be coordinated across a separate distributed storage system in a way that delivers high performance and doesn't corrupt data.

I/O forwarding, perhaps first popularized at extreme scale on IBM's Blue Gene platform, is becoming an important tool for scaling to increasingly large compute and storage platforms. The premise of I/O forwarding is to insert a layer between the computing subsystem (the compute nodes) and the storage subsystem (Lustre, GPFS, etc.) that shields the storage subsystem from the full force of the parallel I/O that may be coming from the computing subsystem. It does this by multiplexing the I/O requests from many compute nodes into a smaller stream of requests to the parallel file system.
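The multiplexing idea can be illustrated with a toy model. The sketch below is not taken from any real forwarder: the block-wise client-to-forwarder mapping, the unbounded coalescing of contiguous writes, and every name in it (`assign_forwarder`, `coalesce`, `forward`, the `/scratch/out.dat` path) are assumptions chosen only to show how many client requests can shrink into a few requests to the file system.

```python
from collections import defaultdict

MIB = 1 << 20

def assign_forwarder(client_id, clients_per_forwarder):
    """Map a compute node to an I/O forwarder in contiguous blocks (assumed policy)."""
    return client_id // clients_per_forwarder

def coalesce(requests):
    """Merge writes that are contiguous within the same file into one request."""
    merged = []
    for path, offset, length in sorted(requests):
        if merged and merged[-1][0] == path and merged[-1][1] + merged[-1][2] == offset:
            prev_path, prev_offset, prev_length = merged[-1]
            merged[-1] = (prev_path, prev_offset, prev_length + length)
        else:
            merged.append((path, offset, length))
    return merged

def forward(client_requests, clients_per_forwarder):
    """Queue each (client_id, path, offset, length) on its forwarder, then coalesce."""
    queues = defaultdict(list)
    for client_id, path, offset, length in client_requests:
        queues[assign_forwarder(client_id, clients_per_forwarder)].append((path, offset, length))
    return {fwd: coalesce(reqs) for fwd, reqs in queues.items()}

# 1024 hypothetical clients each write one 1 MiB block of a shared file.
requests = [(c, "/scratch/out.dat", c * MIB, MIB) for c in range(1024)]
streams = forward(requests, clients_per_forwarder=128)
total = sum(len(reqs) for reqs in streams.values())
print(f"{len(requests)} client requests -> {total} requests from {len(streams)} forwarders")
```

In this idealized access pattern each forwarder ends up with a single large contiguous write. Real workloads are rarely this regular, and a real forwarder would bound the size of the requests it issues.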

Implementations

This page is under construction and currently serves as a dumping ground for interesting notes on emerging I/O forwarding technologies. Some of the most successful, promising, and interesting I/O forwarding systems include:

CIOD - Blue Gene's I/O forwarder

My understanding is that the compute node kernel forwards all I/O-related syscalls to the I/O node, where they are executed by the Control and I/O Daemon (CIOD). This is used on BG/L, BG/P, and BG/Q. A good paper describing the Blue Gene I/O forwarder was presented at SC'10.
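Syscall function shipping of this kind can be sketched in a few lines. This is only an illustration of the general technique and says nothing about how CIOD is actually implemented: the JSON wire format, the `HANDLERS` table, and the `ship` and `io_node_execute` functions are all hypothetical, and a direct function call stands in for the network hop between the compute node and the I/O node.

```python
import json
import os
import tempfile

# Hypothetical set of calls the I/O node is willing to execute on a client's behalf.
HANDLERS = {
    "open": lambda path, flags, mode: os.open(path, flags, mode),
    "write": lambda fd, data: os.write(fd, data.encode("utf-8")),
    "close": lambda fd: os.close(fd),
}

def io_node_execute(message):
    """I/O node side: decode the request, run the real syscall, encode the reply."""
    request = json.loads(message)
    try:
        result = HANDLERS[request["call"]](*request["args"])
        return json.dumps({"result": result, "errno": 0}).encode()
    except OSError as err:
        return json.dumps({"result": -1, "errno": err.errno}).encode()

def ship(call, *args):
    """Compute node side: marshal the call instead of executing it locally."""
    message = json.dumps({"call": call, "args": args}).encode()
    reply = json.loads(io_node_execute(message))  # stands in for the network hop
    if reply["errno"]:
        raise OSError(reply["errno"], os.strerror(reply["errno"]))
    return reply["result"]

text = "hello from a compute node\n"
path = os.path.join(tempfile.mkdtemp(), "shipped.txt")
fd = ship("open", path, os.O_WRONLY | os.O_CREAT, 0o644)
written = ship("write", fd, text)
ship("close", fd)
```

The file descriptor returned to the client is only meaningful on the I/O node, which is why every later call that uses it has to be shipped as well.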

IOFSL

IOFSL is an implementation developed by the I/O wizards at Argonne whose genesis is in ZOID, which was an open-source reimplementation of Blue Gene's CIOD.

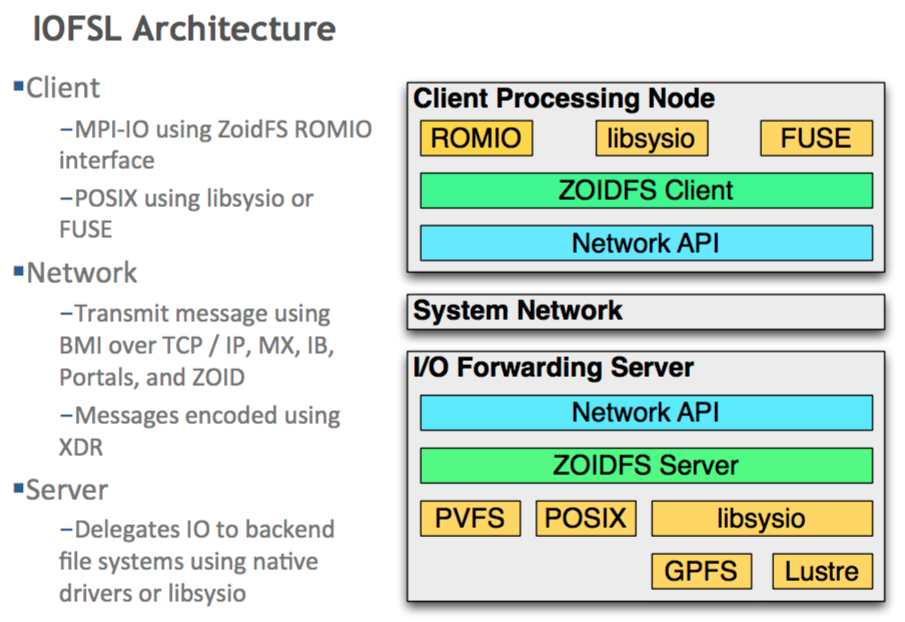

The IOFSL Architecture

The above diagram describes how the client and server side of IOFSL are laid out and was taken from a good slide deck on IOFSL presented in 2010.

IOFSL relies on the interface provided by libsysio, originally a component in the Catamount kernel, to provide a POSIX-like view of a forwarded file system to an application. Behind the scenes, sysio translates POSIX I/O calls to filesystem-specific API calls (e.g., liblustre or libpvfs) or a user-space function shipping mechanism. The IOFSL paper (Ali et al., 2009) provides a great deal of detail on how it all operates.
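The translation step can be pictured as a dispatch table keyed on mount point. The following is a loose sketch of that pattern and not the libsysio API: `Namespace`, `NativeDriver`, `ShippingDriver`, the mount prefixes, and the file contents are all invented for illustration.

```python
class NativeDriver:
    """Stands in for a file-system-specific client library (hypothetical)."""
    def __init__(self, files):
        self.files = files

    def read(self, path, offset, length):
        return self.files[path][offset:offset + length]

class ShippingDriver:
    """Stands in for function shipping: each call is recorded and handed to a server."""
    def __init__(self, server):
        self.server = server
        self.shipped = []

    def read(self, path, offset, length):
        self.shipped.append(("read", path, offset, length))
        return self.server.read(path, offset, length)

class Namespace:
    """POSIX-like front end that routes each call to the driver mounted at its path."""
    def __init__(self):
        self.mounts = {}

    def mount(self, prefix, driver):
        self.mounts[prefix] = driver

    def pread(self, path, offset, length):
        matches = [p for p in self.mounts if path.startswith(p + "/")]
        if not matches:
            raise FileNotFoundError(path)
        prefix = max(matches, key=len)  # longest matching mount point wins
        return self.mounts[prefix].read(path[len(prefix):], offset, length)

remote = NativeDriver({"/data.bin": b"0123456789"})
shipper = ShippingDriver(remote)
ns = Namespace()
ns.mount("/local", NativeDriver({"/a.txt": b"local bytes"}))
ns.mount("/forwarded", shipper)

local_bytes = ns.pread("/local/a.txt", 0, 5)
forwarded_bytes = ns.pread("/forwarded/data.bin", 2, 4)
```

The application issues the same `pread` call in both cases; only the driver behind the mount point decides whether the request is served directly or shipped elsewhere.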

IOFSL was more of an experimental/research platform than a production tool and is no longer funded or developed to my knowledge.

Cray Data Virtualization Service (DVS)

Rather than a kernel module that performs syscall function shipping, DVS is a Linux VFS driver that provides a POSIX-like mount point on the client. Client I/O to this DVS file system is forwarded to one DVS server (or several, in the case of cluster parallel mode), which acts as a client of an underlying file system.

Intel IOF

Intel is developing an I/O forwarding service as a part of the CORAL system being deployed at Argonne. It is not yet a product, but its source code can be browsed online.

v9fs/9P

v9fs is the Linux implementation of the Plan 9 remote file system, and 9P is its protocol. Several features, including its support for re-exporting mounts and for transport via RDMA, have given it traction as a mechanism for I/O forwarding.
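To give a feel for how lightweight the protocol is, here is a minimal sketch that encodes and decodes the first message of a 9P session, Tversion, using the wire layout `size[4] type[1] tag[2] msize[4] version[s]` with little-endian integers and length-prefixed strings. The helper names and the `msize` value are assumptions made for this example, and nothing here talks to a real server.

```python
import struct

TVERSION = 100   # 9P message type code for a version request
NOTAG = 0xFFFF   # tag value reserved for version negotiation

def encode_tversion(msize, version):
    """Build a Tversion message; the size field counts the whole message, itself included."""
    v = version.encode("utf-8")
    body = struct.pack("<BHIH", TVERSION, NOTAG, msize, len(v)) + v
    return struct.pack("<I", 4 + len(body)) + body

def decode_tversion(message):
    """Parse a Tversion message and return (msize, version)."""
    size, mtype, tag, msize, vlen = struct.unpack_from("<IBHIH", message)
    if size != len(message) or mtype != TVERSION:
        raise ValueError("not a well-formed Tversion message")
    return msize, message[13:13 + vlen].decode("utf-8")

msg = encode_tversion(msize=65536, version="9P2000.L")
negotiated = decode_tversion(msg)
```

The fixed header is 13 bytes, so the whole request for the `9P2000.L` dialect fits in 21 bytes.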

Useful links:

- Grave Robbers from Outer Space: Using 9P2000 Under Linux - the paper that describes the 9P protocol's implementation within Linux, 9P2000.L

- v9fs: Plan 9 Resource Sharing for Linux - a more candid version of the v9fs man page for Linux that discusses its history and benefits over NFS and CIFS

NFS-Ganesha

NFS-Ganesha, developed by CEA, includes a 9P server implementation and has been used to perform I/O forwarding of Lustre.

Useful links:

- NFS-Ganesha: Why is it a better NFS server for Enterprise NAS? - touches on the benefits (and limitations) of implementing file systems in user-space

diod

diod is a 9P server implementation that includes extensions to facilitate I/O forwarding.

Useful links:

- I/O Forwarding on Livermore Computing Commodity Linux Clusters - a really nice review of I/O forwarding principles and design goals. Unfortunately incomplete, but still very useful.

- I/O Forwarding for Linux Clusters - an early slide deck from LLNL describing diod as an I/O forwarder

Relevant Transport Protocols

I/O forwarding ultimately relies on an underlying network transport layer to move I/O requests from client nodes to the back-end storage servers, and the routers that these transport layers enable may themselves behave like I/O forwarders.

- Lustre LNET is the transport layer used by Lustre, and LNET routers typically forward I/O requests from many Lustre clients to a much smaller group of Lustre object storage servers (OSSes). Incidentally, Cray DVS (see above) uses LNET for its transport, but does not use LNET's routing capabilities.

- Mercury is an emerging transport protocol for high-performance computing that competes with (or plans to supersede) LNET and DVS.

  - Strictly speaking, Mercury replaces Lustre's RPC protocol rather than LNET itself; Lustre RPCs are what LNET actually carries. However, Mercury includes its own network abstraction layer, which can be used instead of LNET.

  - Mercury itself does not include an I/O forwarding system, but I/O forwarding can be built on top of Mercury. For example:

    - IOFSL (see above) can use Mercury instead of its original BMI protocol

    - Mercury POSIX was a proof-of-concept forwarding service built on top of Mercury to perform POSIX I/O function shipping

    - Intel's IOF uses Mercury for transport

- BMI is the network transport layer used in PVFS2.

Additional Information

- Mercury paper in IEEE Cluster (2013) - the paper presenting Mercury and its design